Have you ever had a need for a dataset that doesn’t easily exist? Wanted to easily generate data that matches your exact requirements for interviewing prospective data science candidates, software testing + development, or training models? Or what about just wanting the right data to use to demonstrate skills + techniques for a Medium article (that doesn’t violate copyright laws)?

Enter dummy data! 📊 ✨

Until recently, creating dummy datasets was somewhat tedious and arduous, the technical folks among us could generate it with expertly written python code, but coding up all your requirements by hand can be time intensive and has a high technical barrier to entry.

Let’s say we have a use case where we want to test a candidate applying for data science to a fintech, and there are real world patterns we want them to be able to identify and discuss, but for privacy reasons we cannot share actual customer transaction data externally.

The solution? Leverage the power of Generative AI to expertly craft complex python code to output our ✨Designer Dummy Datasets✨

Let’s look at how we can prompt GPT4 to generate a dataset for us that meets all of our exact, and somewhat tedious, requirements:

Hi there! You are my expert python programmer and data scientist extraordinaire.

I need to generate a "designer dummy dataset" that meets the following conditions and specifications,

can you please write the python code for me to generate it?

The dataset is transactions in 2019, 2020, and 2021

I want the dataset to contain the following columns: id, transaction_timestamp, user_id, amount, merchant, network, card_type.

The merchant_name should be either: Walmart, Netflix.com, Starbucks, Home Depot, 7/11, Dunkin Donuts, Trader Joe's, and Amazon.com

The user_id should be between 1 and 100 - the amount should be 9.99 for every Netflix.com purchase, less than $10 for Starbucks and Dunkin Donuts, between $25 and 500 for Walmart, Amazon.com, and Home Depot, less than $25 for 7/11, and between $10 and $250 for Trader Joe's

There should be 1000 transactions in 2019, and then it should increase by 100% in 2020, and 150% in 2021. Card_network should be visa or mastercard, and card_type should be credit or debitSee above, we’re leveraging the art of prompt engineering to get ChatGPT to write exactly what we want.

First, make sure you have all of the python libraries installed on your computer. Although this tutorial doesn’t require extensive Python knowledge, you will have to run ChatGPT’s Python code in eg. a Jupyter Notebook to get the output, and thus require the requisite packages.

pip install pandas

pip install numpyAnd now we’re ready to run + test the code Chat GPT generated for us to create our dummy dataset.

import pandas as pd

import numpy as np

import random

from datetime import datetime, timedelta

# Set random seed for reproducibility

random.seed(42)

np.random.seed(42)

# Define the function to generate the dataset

def generate_transactions(start_year=2019, end_year=2021, initial_transactions=1000):

years = range(start_year, end_year + 1)

growth_rate = [100, 150] # Percentage increase for 2020 and 2021 respectively

merchant_names = ['walmart', 'netflix.com', 'starbucks', 'home depot', '7/11', 'dunkin donuts',

'trader joe's', 'amazon.com']

card_networks = ['visa', 'mastercard']

card_types = ['credit', 'debit']

transactions = []

for year in years:

num_transactions = initial_transactions

if year > start_year:

increase = growth_rate.pop(0) / 100

num_transactions = int(num_transactions * (1 + increase))

for _ in range(num_transactions):

user_id = np.random.randint(1, 101)

merchant = random.choice(merchant_names)

network = random.choice(card_networks)

card_type = random.choice(card_types)

date = datetime(year, np.random.randint(1, 13), np.random.randint(1, 29))

timestamp = date.strftime('%Y-%m-%d %H:%M:%S')

if merchant == 'netflix.com':

amount = 9.99

elif merchant in ['starbucks', 'dunkin donuts']:

amount = round(random.uniform(1, 10), 2)

elif merchant in ['walmart', 'amazon.com', 'home depot']:

amount = round(random.uniform(25, 500), 2)

elif merchant == '7/11':

amount = round(random.uniform(1, 25), 2)

else: # trader joe's

amount = round(random.uniform(10, 250), 2)

transactions.append([timestamp, user_id, amount, merchant, network, card_type])

initial_transactions = num_transactions

df = pd.DataFrame(transactions, columns=['transaction_timestamp', 'user_id', 'amount',

'merchant', 'network', 'card_type'])

df.insert(0, 'id', range(1, 1 + len(df)))

return df

# Generate the dataset

df_transactions = generate_transactions()

# Show the first few rows of the dataset to verify

df_transactions.head()

Now, let’s double check that this dummy dataset actually meets the requirements of what we specified; if we’re to be asking a prospective Data Science candidate to analyze and find trends, we want the trends to actually be there. So let’s ask ChatGPT for the python code to check our requirement for percentage increase (indicating the company growth):

# Calculate total transaction counts for each year and the percentage change

transaction_counts = df_transactions['transaction_timestamp'].apply(lambda x: datetime.strptime(x, '%Y-%m-%d %H:%M:%S').year).value_counts().sort_index()

# Calculate percentage change

percentage_change = transaction_counts.pct_change().fillna(0) * 100

# Combine into a single DataFrame

summary = pd.DataFrame({'Total Transactions': transaction_counts, 'Percentage Change (%)': percentage_change}).reset_index()

summary

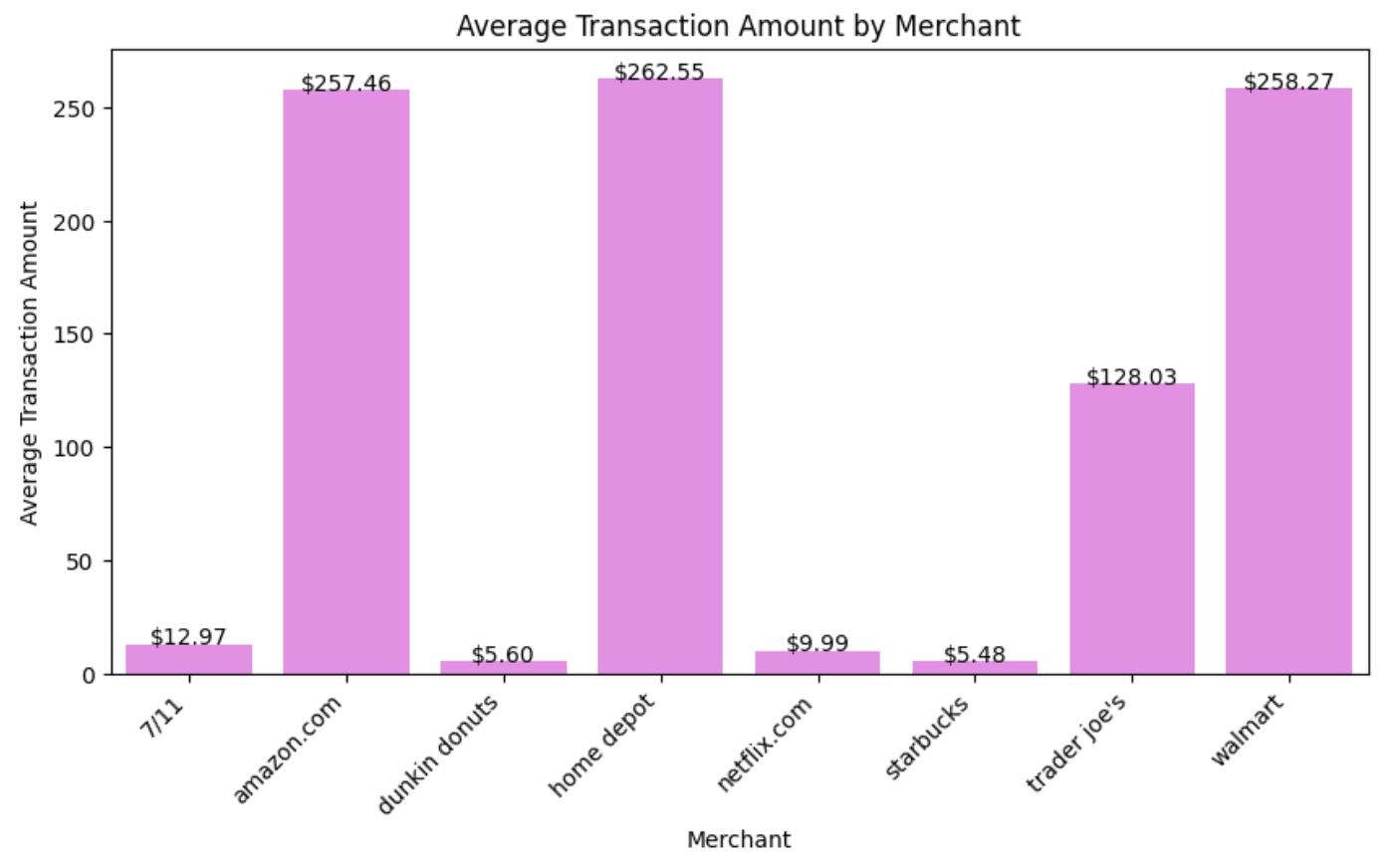

Below, you can see the results of what we might receive from a prospective Data Analyst or Data Science applicant who is analyzing our dummy dataset, looking for the exact trends and findings that we explicitly hid inside it.

(Note we didn’t include data quality issues in this "designer dummy dataset" but you absolutely could add that into your prompt, if you wanted to create a need to correct datatypes, handle nulls, or catch outliers!)

There are a variety of applications, outside of interviewing candidates, where this method is useful! As a prospective data science candidate myself, I’ve found it enormously helpful to generate dummy datasets related to the companies I am applying to ahead of any technical screens to get a sense of how to work with that industry’s data.

And within a data organization, designer dummy datasets can be used in a variety of scenarios! Top of mind for me as a former data engineer at Chime are:

- Creating dummy data to test data anonymization tools (you don’t want to expose real customer PII during your development process!)

- Testing data pipelines – we want to check the reliability, efficiency, and accuracy of data pipelines, and easily model scenarios. Creating specific datasets allows you to do this with ease!

- Validating data quality assurance processes by generating data that has specific errors to check your processes catch and alert correctly.

And for data science writers on Medium, this technique could be invaluable for generating realistic, copyright and privacy free datasets that allow you to show off your data viz skills!

Limitations + Considerations

While Genai can be extremely powerful and useful for generating these dummy datasets, it’s important to recognize there are limitations to their applications, especially when created from human input like we are doing here. Your prompts will never fully capture the accuracy, realism, and oftentimes "messiness" of real consumer datasets, and therefore a method like this isn’t a replacement for real training data for machine learning models, no matter how complex your prompts are.

Bias. Since we as humans are writing these prompts, any datasets generated may contain inherent biases that we, as the writer, hold. It’s important to take that into consideration when generating "dummy datasets" that your data doesn’t discriminate against people or places. Keep this in mind whenever generating your datasets!

As you can see in this brief tutorial, GPT4 is an incredibly powerful tool for creating custom datasets that meet strict and complex requirements, by leveraging its next level coding abilities and your own prompt engineering skills, you can create incredibly detailed and intricate data for a variety of purposes. This is just the beginning!

Thank you for reading, and please check out my other articles if you’re interested in data + AI! 👇