Around this time last year, many speculated whether ChatGPT would have replaced Google by now. It clearly hasn’t happened yet. With Google releasing Gemini, its competitor to ChatGPT, two weeks ago, I am unsure whether this is still a valid concern.

The release of ChatGPT in late 2022 sent general interest in Artificial Intelligence (AI), and especially in Generative AI, skyrocketing in 2023.

You might have heard the term "AI winter" before. It describes a period of reduced interest in AI and, thus, reduced funding. This year, in contrast, we experienced a true "AI summer", with venture capital firms investing over $36 billion into Generative AI, according to The Economist.

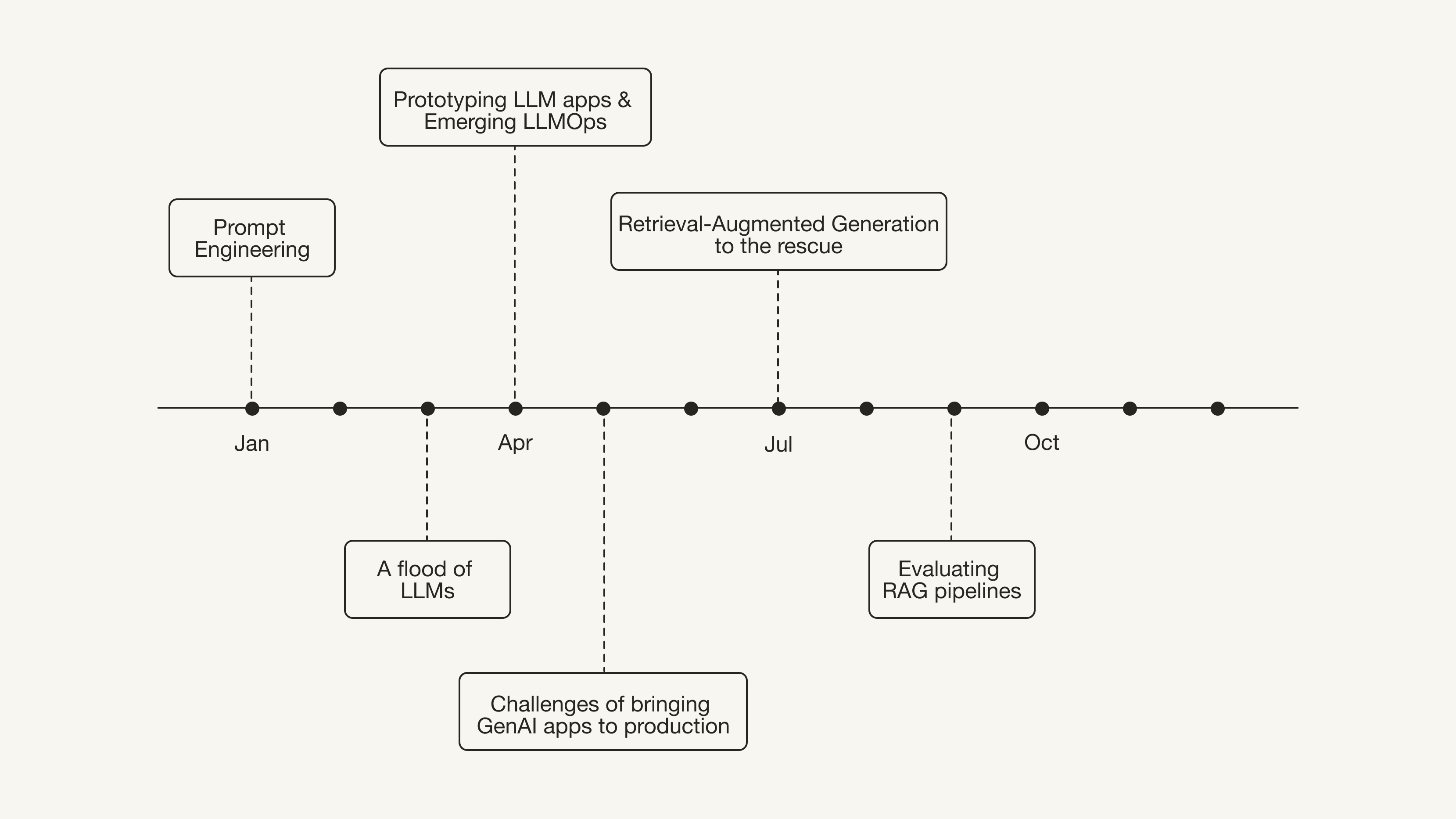

In January, people started playing around with ChatGPT and exploring its capabilities. Soon, they realized that how you phrase your question (the "prompt") affects the answer you get (the "completion"). This realization led to increased interest in "prompt engineering" and its best practices.

However, while the general public was still amazed by ChatGPT’s capabilities and exploring different prompting techniques, researchers were not resting. In the first quarter of 2023, many people in the AI space felt they couldn’t keep up with the pace of AI research: Meta released LLaMA at the end of February, followed in March by Jurassic-2 from AI21 Labs, GPT-4 from OpenAI, Claude from Anthropic, Falcon-40B from the Technology Innovation Institute, Bard from Google, and BloombergGPT from Bloomberg L.P.

By spring 2023, developers had moved past playing around with ChatGPT. They had collected various ideas about what they could build with LLMs and were ready to get hands-on with projects such as cover letter generators, YouTube summarizers, customized question-answering chatbots, and so on.

In contrast to traditional Machine Learning (ML) models, which typically have to be trained on task-specific data before they are useful, Large Language Models (LLMs), or more generally foundation models (FMs), let developers access their capabilities through a simple API call. These FMs and their accessibility changed how developers build AI-powered applications.

LLMs changed the way we build AI-powered applications.

With this new, faster way to build AI-powered applications, a new landscape of developer tools began to emerge around March and April, and with it a new term: LLMOps.

LLMOps is similar to MLOps: it supports developers along the development lifecycle of an LLM-powered application. However, since building applications with LLMs differs from building traditional AI-powered applications, new developer tools quickly emerged:

- Frameworks like LangChain and LlamaIndex gained massive interest. These frameworks enabled developers to build apps with LLMs quickly and modularly.

- Vector databases, which enable semantic search, had been around for a while. However, developers realized they could also be used to supercharge LLM applications with external data of any kind.
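
To make "semantic search" a bit more concrete, here is a minimal sketch of what a vector database does at its core: it stores an embedding vector per document and returns the documents whose vectors are most similar to the query's vector. This assumes the embeddings were already computed; the hand-written 3-dimensional vectors and the in-memory `index` dictionary are hypothetical stand-ins for a real embedding model and a real vector database, and cosine similarity is just one common choice of similarity measure.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec: list[float], index: dict[str, list[float]], top_k: int = 2):
    """Return the top_k (doc_id, score) pairs most similar to the query vector.

    `index` is a hypothetical in-memory stand-in for a vector database:
    it maps document IDs to precomputed embedding vectors.
    """
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # most similar first
    return scored[:top_k]


# Toy 3-dimensional "embeddings" (assumed); real embedding models produce far more dimensions.
index = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "office_hours": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # pretend embedding of "How do I get my money back?"
print(semantic_search(query, index, top_k=1))
```

Note that the query and the best match share no keywords in this imagined example; they are matched purely because their vectors point in a similar direction, which is what distinguishes semantic search from keyword search.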

As early as April of this year, practitioners started sharing their experiences and challenges when bringing their LLM applications to production. In her popular blog post "Building LLM applications for production," Chip Huyen discussed challenges like cost, latency, hallucinations, and backward and forward compatibility.

"It’s easy to make something cool with LLMs, but very hard to make something production-ready with them." – Chip Huyen in Building LLM applications for production

In his popular blog post "Patterns for Building LLM-based Systems & Products" from July, Eugene Yan outlines seven patterns. The three that stood out to me in 2023 were fine-tuning, retrieval-augmented generation, and evaluations:

From a data scientist’s perspective, fine-tuning is the go-to technique for teaching a neural network new knowledge. Thus, fine-tuning LLMs was the first popular approach to giving general-purpose LLMs access to proprietary or domain-specific data. Especially with the release of open-source LLMs, such as Llama 2 [3], and efficient fine-tuning techniques for LLMs, such as QLoRA [1], fine-tuning LLMs has become more accessible to practitioners.
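
Why are techniques like QLoRA so much cheaper than full fine-tuning? QLoRA builds on LoRA, which freezes a pretrained weight matrix W (d × k) and trains only a low-rank update W + BA, with B of shape d × r and A of shape r × k. QLoRA additionally stores the frozen base weights in 4-bit precision. The back-of-the-envelope sketch below illustrates both savings; the matrix size, the rank r = 8, and the 7-billion-parameter model are illustrative assumptions, not values taken from [1], and the memory estimate ignores activations, optimizer state, and quantization overhead.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when updating a full d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a rank-r update B @ A, with B: d x r and A: r x k."""
    return d * r + r * k


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory (GB) needed just to store the frozen weights at a given precision."""
    return num_params * bits_per_param / 8 / 1e9


# Assumed sizes: one 4096 x 4096 projection matrix, LoRA rank 8.
d = k = 4096
r = 8
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,} params, LoRA: {lora:,} params ({lora / full:.2%})")
# → full: 16,777,216 params, LoRA: 65,536 params (0.39%)

# Assumed 7-billion-parameter base model stored in 16-bit vs. 4-bit precision.
print(f"16-bit: {weight_memory_gb(7e9, 16):.1f} GB, 4-bit: {weight_memory_gb(7e9, 4):.1f} GB")
# → 16-bit: 14.0 GB, 4-bit: 3.5 GB
```

Training well under one percent of the parameters of a matrix, while keeping the frozen base model in roughly a quarter of the memory, is what moved fine-tuning within reach of a single consumer-grade or cloud GPU for many practitioners.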

The concept of Retrieval-Augmented Generation (RAG) became a hot topic around July 2023, although the original RAG paper [2] had already been published in 2020.

Its popularity stemmed from the fact that RAG lets developers separate knowledge from the LLM’s reasoning capabilities and store that knowledge in an external database, which is easier to update than knowledge baked into the model’s weights through fine-tuning.
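
The core RAG loop can be sketched in a few lines: retrieve the most relevant documents for a question from an external knowledge base, then paste them into the prompt as context before calling the LLM. This is a minimal sketch under several assumptions: word overlap stands in for embedding similarity (a vector database would normally do this step), the prompt template is made up for illustration, `retrieve` and `build_rag_prompt` are hypothetical helpers rather than any framework’s API, and the actual LLM call is left out.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude, hypothetical stand-in for an embedding model."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, knowledge_base: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q_tokens = tokenize(question)
    ranked = sorted(knowledge_base, key=lambda doc: len(q_tokens & tokenize(doc)), reverse=True)
    return ranked[:top_k]


def build_rag_prompt(question: str, knowledge_base: list[str], top_k: int = 1) -> str:
    """Augment the question with retrieved context; the result would be sent to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, knowledge_base, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


knowledge_base = [
    "The support hotline is open Monday to Friday.",
    "Refunds are processed within 14 days.",
]
print(build_rag_prompt("How long do refunds take?", knowledge_base))

# Updating the knowledge means editing the external store; the model itself is untouched.
knowledge_base.append("Refunds for digital goods are processed within 3 days.")
print(retrieve("How long do refunds for digital goods take?", knowledge_base))
```

The last two lines illustrate the point made above: new knowledge becomes available to the application as soon as it is added to the knowledge base, with no retraining step.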

In autumn and winter, practitioners started discussing the limitations of RAG pipelines, along with possible solutions and ways to evaluate them.

Some people look back at 2023 and are surprised by how few LLM-powered applications emerged. This is probably because we did not expect bringing them to production to be this challenging.

Although a few Generative AI applications have been released, such as Amazon’s product summaries feature, many companies are still experimenting with and evaluating their solutions before they feel confident releasing them.

In this sense, you can view 2023 as the year of experimentation and getting to know Generative AI.

What to expect for 2024

2024 will likely revolve around successfully putting Generative AI solutions into production, so we should encounter more LLM-powered features in the wild.

How to evaluate LLM-powered applications before production and monitor them once they are in production could become a hotter topic of discussion, with many new frameworks, metrics, and paradigms emerging. As we learn how to properly measure the performance of RAG pipelines, question-answering systems, and chatbots, we will probably also find new techniques to improve their performance.
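
As a small taste of what "measuring the performance of RAG" can look like, the sketch below scores only the retrieval step of a pipeline with two common information-retrieval metrics: hit rate at k (did the relevant document show up in the top k results?) and mean reciprocal rank (how high did it rank?). The choice of these two metrics is an assumption for illustration, not a standard the article prescribes, and the evaluation set of retrieved document IDs and hand-labeled relevant documents is entirely hypothetical. Evaluating the generated answers themselves needs additional metrics.

```python
def hit_rate_at_k(results: list[list[str]], relevant: list[str], k: int) -> float:
    """Fraction of questions whose relevant document appears in the top-k results."""
    hits = sum(1 for ranked, rel in zip(results, relevant) if rel in ranked[:k])
    return hits / len(relevant)


def mean_reciprocal_rank(results: list[list[str]], relevant: list[str]) -> float:
    """Average of 1 / rank of the relevant document (0 if it was not retrieved)."""
    total = 0.0
    for ranked, rel in zip(results, relevant):
        if rel in ranked:
            total += 1.0 / (ranked.index(rel) + 1)  # ranks are 1-based
    return total / len(relevant)


# Hypothetical evaluation set: retriever output per question and the labeled relevant document.
retrieved = [
    ["doc_a", "doc_b", "doc_c"],  # relevant doc at rank 1
    ["doc_c", "doc_a", "doc_b"],  # relevant doc at rank 2
    ["doc_b", "doc_c", "doc_d"],  # relevant doc not retrieved at all
]
relevant = ["doc_a", "doc_a", "doc_e"]

print(hit_rate_at_k(retrieved, relevant, k=1))
print(hit_rate_at_k(retrieved, relevant, k=3))
print(mean_reciprocal_rank(retrieved, relevant))  # → 0.5
```

Tracking numbers like these across changes to chunking, embedding models, or retrievers is one way to turn "it seems to work better" into a measurable improvement.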

As these Generative AI systems mature, we can expect to see more and more Generative AI applications that help us boost productivity and improve customer experiences.

As we gain more experience with Generative AI solutions that process sensitive information, such as personal or proprietary data, I can imagine that mitigating data leakage and protecting sensitive data will become more important than before.

One thing is certain: you can expect a lot of movement in 2024. Because the field is evolving so quickly, even its experts only recently learned about many of these techniques. So if you are interested in this field, now is a great time to gain experience for later.

"[…I]f you join in now, you can be there from the very beginning and become an expert. Even the experts in this field have only started exploring it recently, so the barriers to entry are low. By […] starting to build apps with LLMs, you’ll have a big advantage when the industry matures" – Darek Kleczek in "Building LLM-Powered Apps"

This was a review of 2023 from a technical point of view. If you are interested in a more economic view of what happened in the Generative AI space in 2023, I highly recommend this piece:

This article mainly focused on the Generative AI niche, which is only a small fraction of the overall AI space. There have been many active discussions and exciting advancements in other areas, such as AI ethics, AI regulation, and Green AI (making AI models smaller and more efficient, and thus more sustainable).

I am curious to hear your predictions for the AI space in 2024!

References

Literature

[1] Dettmers, T., Pagnoni, A., Holtzman, A., & Zettlemoyer, L. (2023). QLoRA: Efficient finetuning of quantized LLMs. arXiv preprint arXiv:2305.14314.

[2] Lewis, P., et al. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459–9474.

[3] Touvron, H., Martin, L., Stone, K., Albert, P., Almahairi, A., Babaei, Y., … & Scialom, T. (2023). Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288.

Images

If not otherwise stated, all images are created by the author.