Hands-on Tutorials

A comparison of two different methods designed for either simplicity or speed

Graph-enabled data science and machine learning has become a very hot topic in a variety of fields lately, ranging from fraud detection to knowledge graph generation, social network analytics, and so much more. Neo4j is one of the most popular and widely used graph databases in the world and offers tremendous benefits to the data science community. And while Neo4j comes with some training graphs baked into the system, at some point the data scientist will want to populate it with their own data.

The easiest format for Neo4j to ingest data from is CSV. A web search for how to populate the database reveals several potential methods, but in this post I will focus on two of the most common and most powerful ones, discuss when you might want to consider each, and walk through some examples of how to use them.

The methods we are going to go through are:

- LOAD CSV: a simple method for when the graphs are small

- Neo4j administration tool: a fast method for when the graphs get large

I will demonstrate both of these in this post and talk about when you might want to use each.

Necessary tools

In order to get started, we will need to have Neo4j on our host computer. You can walk through the data loading examples below using the Neo4j Desktop, which provides a nice UI and is a great place to learn how to work with the database.

However, for the sake of this tutorial I have elected to use a simple Docker container for a few reasons.

First, containers are cool. I always mess stuff up and this is a very safe way to not ruin everything.

Second, so much data science happens in Docker containers these days that it just makes sense to think of Neo4j as being in a container as well.

Lastly, reproducibility is extremely important in data science, so using a container will allow for this.

All of that being said, you will need the following to run through the examples below:

- Docker (installation instructions can be found here)

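- A Neo4j Docker image (I will be using neo4j:latest, which at the time of writing this is version 4.2.2; latest now points to Neo4j 5.x, which changed several of the commands used below, so you may want to use neo4j:4.2.2 instead to follow along exactly)

- A data set in CSV format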

For the data set, I am going to demonstrate data loading using the popular Game of Thrones graph that is available from this repository maintained by Andrew Beveridge.

One reason for using this graph as a walkthrough is that the data is formatted nicely and is reasonably clean – attributes that you will discover are quite helpful when loading in your data! Even so, we will wind up having to do some data cleaning and reformatting as we go along, but none of it is too major.

Speaking of cleaning the data set, note that there is a typo or naming convention inconsistency in one of the file names. You will see that the season 5 node file is named got-s5-node.csv rather than following the pattern we would expect, got-s5-nodes.csv.

Lastly, I assume the reader has some familiarity with Cypher. If this is not presently a skill you possess, I highly recommend the online Cypher tutorial at the Neo4j website (esp. the section on creating data). In particular, if you are just learning Cypher, I recommend you check out the docs for [LOAD CSV](https://neo4j.com/docs/cypher-manual/current/clauses/load-csv/), [MERGE](https://neo4j.com/docs/cypher-manual/current/clauses/merge/), [MATCH](https://neo4j.com/docs/cypher-manual/current/clauses/match/), [SET](https://neo4j.com/docs/cypher-manual/current/clauses/set/), and [PERIODIC COMMIT](https://neo4j.com/docs/cypher-manual/current/clauses/load-csv/#load-csv-importing-large-amounts-of-data), which we will be using below.


Docker container

Prior to firing up the Docker container, we need to do a bit of housekeeping to get our data files in the right places.

First, we want to make sure the CSV files are in the right place. Of course, you can tell Docker to look for them wherever you want to put them. In my case, I put all of my .csv files in ~/graph_data/gameofthrones/data/, which is the directory mounted into the container below.

Once all of this is in place, run the following command from the CLI:

docker run -p 7474:7474 -p 7687:7687 \
--volume=$HOME/graph_data/data:/data \
--volume=$HOME/graph_data/gameofthrones/data:/var/lib/neo4j/import \
--env NEO4JLABS_PLUGINS='["apoc", "graph-data-science"]' \
--env NEO4J_apoc_import_file_enabled=true \
--env NEO4J_AUTH=neo4j/1234 \
neo4j:latest

So let’s break this down. We have some port forwarding going on there, which will allow you to connect to the Neo4j Browser UI in your web browser on localhost:7474. Port 7687 is used by the Bolt protocol, which is how programs written in Python or other programming languages connect to the database.

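Next, we mount two local folders into the container for reading and writing. $HOME/graph_data/data is mapped to /data, where Neo4j keeps its database files. The folder holding our CSV files is mapped to /var/lib/neo4j/import, the container's default import directory.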

After that, we bring in some environment variables. These are pretty much all optional, but I include them above in case you want to use libraries like APOC or GDS. The first of these tells the container to download versions of APOC and the GDS library that match the running Neo4j version and load them as plugins. We also pass a config setting as an environment variable that tells Neo4j it is alright for APOC to read files.

And finally, we set a password (the wonderfully complicated 1234) for the default neo4j user. (You can leave that bit out, but if you do, you will have to reset the password for the user every time you fire up the container.)

The final note on this is that the container will automatically change the ownership and permissions of your files, and they will only be accessible by the root user. So you might consider keeping a backup of them somewhere the container doesn’t touch if you plan on viewing or editing them without sudo.

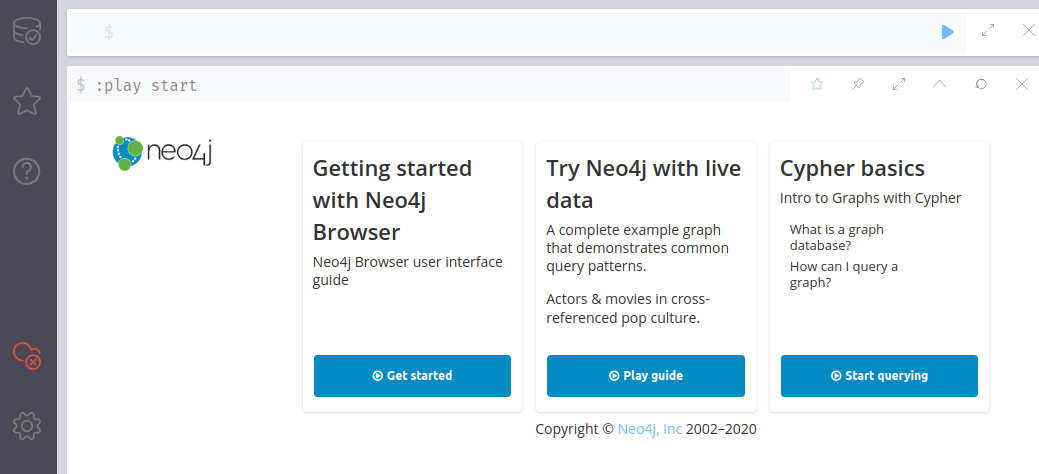

Assuming all goes well, you should be able to point your web browser to localhost:7474 and see a running UI. So now we can go on to the next step!

LOAD CSV: The simple approach

The LOAD CSV command is one of the easiest ways to get your data into the database. It is a Cypher command that is usually run through the Neo4j Browser UI. However, it can also be sent via the Python driver (or the driver for your language of choice). We will save interfacing with the database via Python for a different blog post.

This approach is great if you have a "small" graph. But what constitutes small? A good rule of thumb is that if you have fewer than about 100,000 nodes and edges (and the Game of Thrones graph certainly qualifies), then this is a great option. However, it is not the fastest approach; a bulk loader is much faster. If your graph is a bit larger, you might want to consider switching to one.

Looking at our node files, we can see that we have one file per season. The files themselves follow a very simple format of Id, Label. Id is the character's name in capital letters (e.g., ADDAM_MARBRAND), and Label is the actual character name.

It is generally good practice to create some uniqueness constraints on the nodes to ensure that there are no duplicates. One advantage of doing so is that this will create an index for the given label and property. In addition to speeding up query searches for that data, it will ensure that MERGE statements on nodes (such as the one used in our load statement) are significantly faster. To do so, we use:

CREATE CONSTRAINT UniqueCharacterId ON (c:Character) ASSERT c.id IS UNIQUE

Please note that labels, relationship types, and property keys are case sensitive in Neo4j. So id is not the same as Id. Common mistake!
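Because property keys are case sensitive, row.Id in the node-loading query only works if the CSV header is spelled exactly Id. A header of id would make row.Id null, and MERGE refuses to merge on a null property value. A quick pre-flight check in Python can catch this before anything is run. This is a minimal sketch: the function name check_headers is hypothetical, and the expected column sets are taken from the file formats described in this post.

```python
import csv
import io

def check_headers(f, expected):
    """Return expected column names missing from the CSV header (exact, case-sensitive match)."""
    header = next(csv.reader(f), [])  # an empty file yields an empty header
    present = set(header)  # no case folding: "id" is not "Id" in Neo4j
    return sorted(set(expected) - present)

# Column names used by the node and edge queries in this post.
NODE_COLUMNS = {"Id", "Label"}
EDGE_COLUMNS = {"Source", "Target", "Weight", "Season"}

if __name__ == "__main__":
    good = io.StringIO("Id,Label\nNED,Ned\n")  # illustrative sample rows
    bad = io.StringIO("id,Label\nNED,Ned\n")
    print(check_headers(good, NODE_COLUMNS))
    print(check_headers(bad, NODE_COLUMNS))
```

With the real files, you would pass something like open("got-s1-nodes.csv", newline="") instead of the in-memory samples.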

Now we can load our node data into the database using:

WITH "file:///got-s1-nodes.csv" AS uri
LOAD CSV WITH HEADERS FROM uri AS row
MERGE (c:Character {id: row.Id})
SET c.name = row.Label

Yes, you do need to use three slashes. The good news is that we linked our data to /var/lib/neo4j/import, which is the default directory in the container for reading files. So you will not need to specify a lengthy directory structure, which is fraught with peril!

Here we load the characters one row at a time. Each row creates a node with the label Character and a property called id. We then set a new property called name equal to the CSV value of Label (not to be confused with the Character label that every node is given).

(Note that uri can also be the web address of a CSV file, so you are not limited to files on your local computer.)

Notice that we are using the MERGE command here. We could have also used the CREATE command, but there is an important difference in what they do. MERGE first checks whether a matching node already exists and, if so, does not create another one. It acts as either a MATCH or a CREATE: a new node is created only if it is not already found in the database.

So in this way, MERGE commands are idempotent. Rerunning the same load, or loading a character who appears in several season files, does not create duplicate nodes.
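To make the MERGE/CREATE distinction concrete, here is a toy in-memory model in Python. It is only an analogy for single-node patterns keyed on id, not how Neo4j works internally, and the create and merge functions are illustrative names, not part of any Neo4j API. create always adds a node. merge adds one only when no node with that id exists, and the SET step then updates name either way.

```python
def create(nodes, node_id, name):
    """CREATE: always adds a new node, even if one with this id exists."""
    nodes.append({"id": node_id, "name": name})

def merge(nodes, node_id, name):
    """MERGE ... SET: reuse the node with this id (MATCH), else add one (CREATE)."""
    for node in nodes:
        if node["id"] == node_id:
            break
    else:  # no break: nothing matched, so create the node
        node = {"id": node_id}
        nodes.append(node)
    node["name"] = name  # SET c.name = row.Label runs in both cases

rows = [("NED", "Ned"), ("ARYA", "Arya")]  # illustrative rows

created, merged = [], []
for _ in range(2):  # load the same file twice
    for node_id, name in rows:
        create(created, node_id, name)
        merge(merged, node_id, name)

print(len(created), len(merged))
```

Loading the same two rows twice leaves four nodes in created but still only two in merged, which is the idempotence described above.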

Next it is time to bring in the edge files. These are formatted in a similar fashion, with the columns Source, Target, Weight, and Season.

To load these in, we will use the following command in the browser:

WITH "file:///got-s1-edges.csv" AS uri
LOAD CSV WITH HEADERS FROM uri AS row
MATCH (source:Character {id: row.Source})
MATCH (target:Character {id: row.Target})
MERGE (source)-[:SEASON1 {weight: toInteger(row.Weight)}]-(target)

Again, the above command reads the file row by row and sets up an edge between a source Character and a target Character. In this case, these reference the values of Id in the node files.

I also assign a relationship type of :SEASON1 (changing this for subsequent seasons). Each edge is weighted by the number of interactions between the source and target characters in that season.

I should also briefly mention that this graph is meant to be undirected (as is specified in the data repository), which is why the last line has no arrow showing a direction from source to target. Strictly speaking, Neo4j stores every relationship with a direction. When a MERGE pattern has no arrow, Neo4j matches an existing relationship in either direction and, if none exists, creates one in a direction of its choosing. We then simply ignore direction when querying. If we wanted this to be a directed graph, we would indicate this with an arrow, changing the pattern to (source)-[...]->(target).

Note that LOAD CSV reads every value from a CSV as a string. I have therefore converted the weights to integers via toInteger, which some graph algorithms will need should you wish to use the weights. And again, if you want to bring in the other seasons, you just rinse and repeat for each node and edge file.
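Rather than editing the file name and relationship type by hand for each season, you could generate the statements. The sketch below uses hypothetical helpers (node_query, edge_query) and assumes the repository's got-sN-nodes.csv / got-sN-edges.csv naming for seasons 1 to 8, plus the season 5 exception noted earlier. It only builds the Cypher strings, which you would then paste into the Browser or send through a driver. Node files come first, since the edge query MATCHes on characters that must already exist.

```python
# Season 5's node file breaks the naming pattern (got-s5-node.csv).
NODE_FILE_OVERRIDES = {5: "got-s5-node.csv"}

def node_query(season):
    """LOAD CSV statement for one season's node file."""
    fname = NODE_FILE_OVERRIDES.get(season, f"got-s{season}-nodes.csv")
    return (
        f'WITH "file:///{fname}" AS uri\n'
        "LOAD CSV WITH HEADERS FROM uri AS row\n"
        "MERGE (c:Character {id: row.Id})\n"
        "SET c.name = row.Label"
    )

def edge_query(season):
    """LOAD CSV statement for one season's edge file, typed :SEASON<n>."""
    return (
        f'WITH "file:///got-s{season}-edges.csv" AS uri\n'
        "LOAD CSV WITH HEADERS FROM uri AS row\n"
        "MATCH (source:Character {id: row.Source})\n"
        "MATCH (target:Character {id: row.Target})\n"
        # Doubled braces produce literal { } inside an f-string.
        f"MERGE (source)-[:SEASON{season} {{weight: toInteger(row.Weight)}}]-(target)"
    )

SEASONS = range(1, 9)
# All node loads first, then all edge loads.
statements = [node_query(s) for s in SEASONS] + [edge_query(s) for s in SEASONS]

if __name__ == "__main__":
    print(";\n\n".join(statements) + ";")
```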

One note regarding importing larger graphs this way: because Neo4j is transactional, a huge import in a single transaction becomes memory intensive.

You might need to reduce the memory overhead of your import by having the data committed to the database periodically. To do so, add the following before the query (the :auto prefix works only in Neo4j Browser):

:auto USING PERIODIC COMMIT 500

This tells Neo4j to commit to the database every 500 rows. It is good practice to do this, particularly if you are memory constrained.

And now we have a populated database for our future graph analytics! It should roughly look like this (although I am not showing every node and have tinkered with the hairball to draw out certain characters):

There are many visualization options out there and the interested reader is encouraged to consult this list for options.

neo4j-admin import

Now that we have seen how to load data with LOAD CSV, we are going to make things slightly more complicated but significantly faster.

Let’s suppose you have a truly "large" graph. In this case, I am talking about graphs with more than 10 million nodes and edges. The above method is going to take a very long time, largely because it is transactional rather than an offline load.

You may still need to use LOAD CSV if you are updating your database in real time, since neo4j-admin import is designed for the initial load into a new, empty database. But even in that case, the updates will usually happen in smaller batches relative to the overall database size.

Data formatting for the import tool

Before populating the graph, we have to format our data in a very particular way. We also need to make sure that the data are exceptionally clean. For the node list, we are going to change the format to the following (first few rows shown):

Id:ID,name,:LABEL
ADDAM_MARBRAND,Addam,Character
AEGON,Aegon,Character
AERYS,Aerys,Character
ALLISER_THORNE,Allister,Character
ARYA,Arya,Character

This might look rather simplistic for this data set, but we should not underestimate its power, because it allows for the easy importing of multiple node types at one time.
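One way to produce this file is to combine the per-season node files with Python's csv module. This is a sketch under a few assumptions. The input files have the Id,Label header described earlier, every node is a Character, and a character who appears in several seasons should be written only once, since the import tool by default treats duplicate IDs as an error. The function name to_admin_nodes is hypothetical.

```python
import csv
import io

def to_admin_nodes(season_files, label="Character"):
    """Combine (Id, Label) node CSVs into one neo4j-admin import node file.

    season_files: iterable of open text files (or any iterables of lines).
    Returns the new CSV as a string, with one row per unique Id.
    """
    names = {}
    for f in season_files:
        for row in csv.DictReader(f):
            # Keep the first name seen; later seasons repeat many characters.
            names.setdefault(row["Id"], row["Label"])
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["Id:ID", "name", ":LABEL"])
    for node_id in sorted(names):
        writer.writerow([node_id, names[node_id], label])
    return out.getvalue()

if __name__ == "__main__":
    # Illustrative sample rows; ARYA appears in both "seasons".
    s1 = io.StringIO("Id,Label\nNED,Ned\nARYA,Arya\n")
    s2 = io.StringIO("Id,Label\nARYA,Arya\nJON,Jon\n")
    print(to_admin_nodes([s1, s2]), end="")
```

With the real files, you would open each one with open(path, newline="") and write the returned string to the new node file in your import folder.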

So, for example, maybe you have another node type representing locations in the stories. All you would have to do is set the :LABEL value of those rows to something like Location. Also, you can add node properties by adding a column such as propertyName and then giving its value as another cell entry in each row.

In a similar fashion, we restructure the edge files to look like:

:START_ID,:END_ID,weight:int,:TYPE
NED,ROBERT,192,SEASON1
DAENERYS,JORAH,154,SEASON1
JON,SAM,121,SEASON1
LITTLEFINGER,NED,107,SEASON1
NED,VARYS,96,SEASON1

As you would expect, we need one row for every edge in the graph, even if the edge types change. For example, two characters who interact in both season 1 and season 2 need one row with type SEASON1 and another with type SEASON2.
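The edge files can be converted with a short script as well. In this sketch (to_admin_edges is a hypothetical name), each input row keeps its Source, Target, and Weight, and the relationship type is built from the Season column, so a season 1 row becomes SEASON1. It assumes the Source,Target,Weight,Season header described earlier and that Season holds the season number.

```python
import csv
import io

def to_admin_edges(season_files):
    """Combine Source,Target,Weight,Season CSVs into one neo4j-admin relationship file."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([":START_ID", ":END_ID", "weight:int", ":TYPE"])
    for f in season_files:
        for row in csv.DictReader(f):
            # One output row per input edge; int() fails loudly on a malformed weight.
            writer.writerow([row["Source"], row["Target"], int(row["Weight"]),
                             f"SEASON{row['Season'].strip()}"])
    return out.getvalue()

if __name__ == "__main__":
    s1 = io.StringIO("Source,Target,Weight,Season\nNED,ROBERT,192,1\n")
    print(to_admin_edges([s1]), end="")
```

Keeping every input row, instead of deduplicating as for nodes, is deliberate: a pair of characters who interact in several seasons should end up with one relationship per season.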

It is very important that the naming conventions be maintained here! For example, your node files must always have a column labeled :ID and can have an optional column called :LABEL for the node labels. Additionally, any number of node properties can be specified here as well (although there are not many in this data set). Your edge files must always have a :START_ID and an :END_ID, and optionally a :TYPE (if :TYPE is omitted, the relationship type has to be given on the command line instead). The names of these marker suffixes cannot be changed.

(Note that in this case I have created new files and file names to reflect the change in format.)

IMPORTANT NOTE!!! There was a typo in the edge list of season 1 with regard to Vardis Egen (don’t worry…I had to look up who that was too). The node list has his Id spelled VARDIS_EGEN, but in a few places (although not all) the edge list spells it VARDIS_EGAN. This has been very recently fixed, but if you have an older version of the repository, you might want to pull the update. Otherwise, assuming you don’t care much about this particular character, the easiest fix is either to add him as another node in the node list with the incorrect spelling or to fix the spelling in the edge list (which is what I have done). This did not cause a problem with the previous method, where the MATCH clauses simply found no VARDIS_EGAN node and skipped those rows. The import tool, however, is much more sensitive to these types of problems.
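Because the import tool is so much stricter, it can be worth checking beforehand that every :START_ID and :END_ID in the relationship file matches an ID in the node file. Below is a minimal sketch (dangling_ids is a hypothetical name). It assumes the reformatted files shown above, with the Id:ID header for nodes and :START_ID/:END_ID for edges.

```python
import csv
import io

def dangling_ids(node_file, edge_file):
    """Return edge endpoint IDs that have no matching node ID, sorted."""
    node_ids = {row["Id:ID"] for row in csv.DictReader(node_file)}
    missing = set()
    for row in csv.DictReader(edge_file):
        for key in (":START_ID", ":END_ID"):
            if row[key] not in node_ids:
                missing.add(row[key])
    return sorted(missing)

if __name__ == "__main__":
    # Made-up sample rows reproducing the VARDIS_EGAN typo.
    nodes = io.StringIO("Id:ID,name,:LABEL\nVARDIS_EGEN,Vardis,Character\nNED,Ned,Character\n")
    edges = io.StringIO(":START_ID,:END_ID,weight:int,:TYPE\nNED,VARDIS_EGAN,5,SEASON1\n")
    print(dangling_ids(nodes, edges))
```

An empty list means every relationship points at a known node. Anything else lists the IDs to fix, either in the node file or in the edge file.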

There are a lot of options that can be used with this format…too many to cover in this post. The interested reader is encouraged to read the documentation on this format, which can be found here.

Using the import tool

For ingesting large amounts of data, Neo4j provides a command-line tool, neo4j-admin import, which can be found inside the container at /var/lib/neo4j/bin/neo4j-admin.

The catch with this tool is that you cannot actually use it to create your graph while the database (at least in Neo4j Community Edition) is running. The database must be shut down first, which poses a bit of a problem for our Docker container. In this case, we are going to start with a fresh container where the database is not yet running.

We will then issue the following command at our local machine’s command line:

docker run \
--volume=$HOME/graph_data/data:/data \
--volume=$HOME/graph_data/gameofthrones/data:/var/lib/neo4j/import \
neo4j:latest bin/neo4j-admin import --nodes import/got-nodes-batch.csv --relationships import/got-edges.batch.csv

This starts up a container that immediately runs the import tool. We specify where the data files live inside the container, relative to /var/lib/neo4j (being sure to use the reformatted CSV files). In our case, the data directory on our local machine is mounted at import/.

Once this command finishes, a database is created whose files are stored locally at $HOME/graph_data/data. From here we can start up the container using the docker run command from earlier in this post. (However, take note that if you ever want to start over with a new database and container, this whole directory must be deleted by root.)

Now that the database is populated and the container is started, we can go into the UI via localhost:7474 and interact with it as per normal.

Concluding thoughts

Once everything is loaded (including all 8 seasons), regardless of your method, you should wind up with a schema that looks like this:

You will find that you have 407 nodes and 4110 relationships.

I have presented two common methods of importing data from CSV files into Neo4j databases. However, like anything in software, there are practically infinite ways to achieve the same result.

I hope this post gives you a clear understanding of the main ones and serves as the beginning of your data science and machine learning journey with graphs!

If you are looking for the next step, actually doing some data science on graphs, please check out my post on How to get started with the Graph Data Science Library of Neo4j.


Special thanks to Mark Needham for help with some query tuning!

PS: It is also usually a good idea to tell Neo4j how much memory should be allocated to the database. Many of the Neo4j algorithms that a data scientist wants to run are memory-intensive. The exact configuration will, of course, depend on the machine that you are running on. Database configuration is something that is unique to your needs and beyond the scope of this post. The interested reader can consult the documentation here for fine tuning. For now I am just going with the default (albeit limited) memory settings.