AI Alignment and Safety

"How an Algorithm Blocked Kidney Transplants to Black Patients" – Wired

"The Netherlands Is Becoming a Predictive Policing Hot Spot" – VICE

"One Month, 500,000 Face Scans: How China Is Using A.I. to Profile a Minority" – The New York Times

As the use of AI becomes more prevalent in our society, so do these shocking headlines. We should not pay attention to how AI systems are designed and developed only when a headline breaks. Rather, Responsible AI should be our first thought when we receive data, define a use case, or start a sprint. After analyzing Google Trends, the team at AI Global identified a disparity between the reporting on and the searching for AI and Responsible AI.

Google Trends on Worldwide Web Searches on Responsible AI, Ethical AI, AI, Bad AI, and Good AI

The only peaks we see in searches for responsible and ethical AI correlate with major news stories, such as The New York Times's "The End of Privacy as We Know It" on February 10, 2020. Even then, these peaks come nowhere near the volume of searches for AI. In order to develop and apply AI responsibly, we must search for and learn about the repercussions of poorly designed systems.

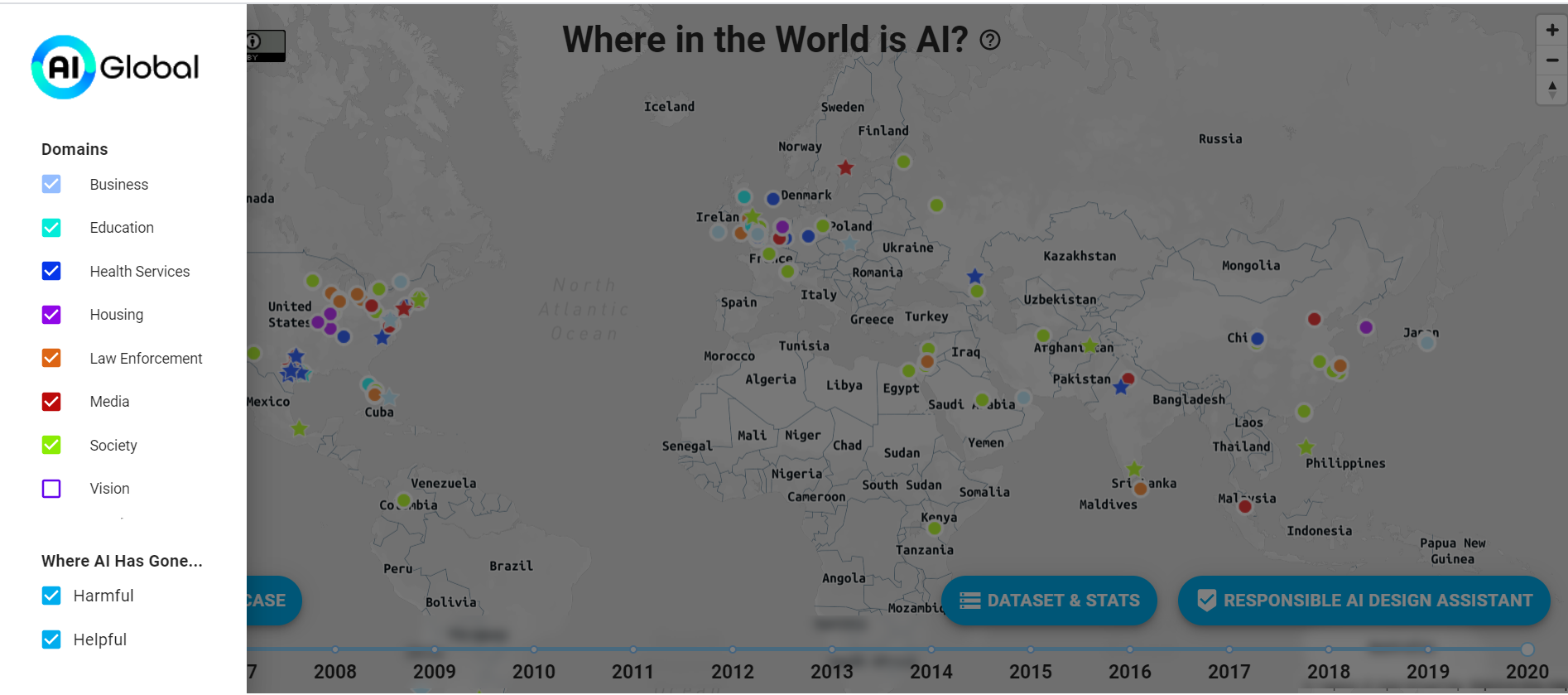

The "Where in the World is AI?" Map is an interactive web visualization tool exploring stories on AI across the world to identify trends and start discussions on more trustworthy and responsible systems. On the map, you can filter by domains from health services to law enforcement, set a year range, and filter by categorization on whether AI has been helpful or harmful.

Where AI Was Harmful

While the map is filled with colorful dots, it’s important not to discount individual data points. In our dataset:

There are cases of injustice, such as in England, where 40% of A-level results were downgraded because an algorithm was used to assess students’ performance.

There are examples of systemic racism, such as Michael Oliver, who was wrongfully charged with larceny because of a facial recognition system in Michigan.

There is evidence of broken feedback loops, such as TikTok creating filter bubbles based on race in China.

When not designed and managed in a responsible way, AI systems can be biased, insecure, and in some cases (often inadvertently) violate human rights.

Where AI Has Helped

We are also hopeful about AI. We’ve seen early instances of how AI can augment and advance our daily lives and society. There are also examples where people have corrected or gotten rid of harmful AI systems. We’ve seen AI create art, music, and even detect asymptomatic COVID-19.

Our Process for Labeling AI as Harmful or Helpful

We understand that many of these cases might fall into the grey area between helpful and harmful. Different cultural perspectives, for example, might label cases differently. Our map is currently used by working groups to discuss how these cases impact trust in responsible systems. Furthermore, AI Global is having internal and external discussions on who can decide what is helpful and what is harmful. We hope that these labels can be a starting point for conversations on responsible AI practices as we navigate this ecosystem.

By highlighting these cases and many more, we hope to shed light on the ramifications of AI across domains and its very real-world consequences. We invite you to explore our map by clicking on a dot/star and learning more about each story.

We are learning & growing, so if you have any feedback or questions, please reach out. A huge thank you to my dream team: Shrivu Shankar, Ameya Deshmukh, Colin Philips, and Lucinda Nguyen, as well as Amaya Mali and Ashley Casovan at AI Global. Thank you to Doreen Lorenzo for her feedback early on and to Jennifer Aue (Sukis), my first AI Design professor, who sparked my interest in this field and encouraged me to write about AI Ethics on Medium 🙂