Getting Started

NLP has skyrocketed during the last two years thanks to great leaps forward. Many state-of-the-art models based on large deep neural networks and Transformers models have been published, e.g. BERT in 2018 as to the most famous. You may find an interesting list here: [1]. These models are already heavily pre-trained on extensive corpora, ready to be used.

You may want to apply one of these for a particular domain such as law or chemistry; and wonder whether these generalist models will perform well.

Good specific-domain tagged datasets are scarce and extremely expensive to build. For instance, the biomedical BioASQ challenge releases only five hundred new expert-annotated questions per year (currently reaching a total amount of three thousand questions). As a comparison, the generalist SQUAD dataset contains more than one hundred thousand examples.

How can you adapt your own model to a specific domain if little or no annotated data? I investigated ways to deal with this. Directly fine-tuning a BERT-like pre-trained model on a one thousand item dataset can yield to poor results (for instance you may obtain an on-off behavior and a high accuracy variance during training as on fig. 4 of [2]); and, although there are some interesting unsupervised methods to generate questions from plain text [3], I was willing to focus my investigation on the promising TandA method and on ways to adapt or transfer models without synthetic data.

In a first part I explain the TandA method; in a second part I show that reversing it leads to good results while demanding no specific labeled data.

TandA method

Presentation

One year ago, a robust method for fine-training large NLP models was published [2], called TandA for Transfer and Adapt. The idea is to decouple the learning of the task from the learning of the domain. This makes learning more stable; results are better. There are two steps:

- Transfer: fine-tune a pre-trained model on a supervised task with a big general dataset. The task can be sentence classification, extractive question answering or answer-sentence selection, for example;

- Adaptation: then fine-tune it on a small specific dataset. This can be the BioASQ dataset for instance. It must correspond to the previously learned task.

I provide here a way to implement and run this with the Transformers package, since the github repository [4] of the project does not provide tutorial nor complete code. I show how to transfer a Transformer to the answer-sentence selection task. This is a GLUE-like binary classification task: does an answer match a question? I use the dataset of [2] called ASNQ. Then I show how to adapt this Transformer to the biomedical domain, thanks to a dataset issued from the BioASQ challenge, for the purpose of the second part of this post.

Implementation

- Download the ASNQ dataset file at [4] (three-four gigabits), untar it. ASNQ format is one column for questions, one for answers and one for labels. Labels are in {1, 2, 3, 4}. Label 4 is a positive match, the three other a negative match. According to [2], only 3 and 4 matter for learning; the dataset can be lightened.

- Preprocess it:

import pandas as pdasnq = pd.read("train.tsv", sep='t', names=['sentence1','sentence2','label']) # format of run_glue.pyasnqNeg = asnq[asnq['label']==3].sample(frac=0.25)

asnqNeg.loc[:,'label'] = 0

asnqPos = asnq[asnq['label']==4]

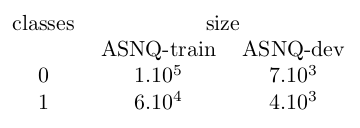

asnqPos.loc[:,'label'] = 1pd.concat([asnqNeg, asnqPos]).to_csv("train.csv", index=False)Do the same for "dev.tsv". I undersample the negative class to obtain the following distribution.

- Download the

run_glue.pyscript for fine-tuning on GLUE-like tasks at [5]. This script needs huggingface’s datasets module (pip install datasets). The line forty-onefrom transformers.trainer_utils import is_main_processcan be commented out if it bugs (erase then the two other occurrences ofis_main_process). - Choose a model among these [6]. You can take

robert-base, or just abert-base. Write the following script and launch it. Learning takes less than a few hours.

python run_glue.py

--model_name_or_path [your model]

--do_train

--do_eval

--train_file [path to train.csv]

--validation_file [path to dev.csv]

--per_gpu_train_batch_size [the max possible size (around 50 for a 10Gb gpu)]

--learning_rate 2e-5

--num_train_epochs 2.0

--output_dir model-asnqI obtain a precision of eighty-eight percents with BERT-base cased. I call this model bert(asnq); this is a BERT transferred onto the ASNQ task.

- Now adaptation. Download data from the BioASQ challenge at [7], unzip it. I use the file BioASQ-7b/train/Full-Abstract/BioASQ-train-factoid-7b-full-annotated.json. It has a SQUAD-like format, question – context – position of a short answer. This is easily convertible to the ASNQ format tokenizing context paragraphs into sentences. I split this file in two to obtain one train set and one test set. A caveat: one question occurs many times, with different context and almost the same short answers; so there will be a small overlap between test and train files.

-

Preprocess it:

You may use a more sophisticated sentence tokenizer, such as nltk’s PunktSentenceTokenizer. I obtain this distribution.

- I use again the

run_glue.pyscript. I apply it to the previous model bert(asnq), thanks to the following script.

python run_glue.py

--model_name_or_path [path to model-asnq]

--do_train

--do_eval

--train_file [path to bioasqTrain.csv]

--validation_file [path to bioasqTest.csv]

--per_gpu_train_batch_size [the max possible size]

--learning_rate [to be choosen ; typically from 1e-6 to 3e-5]

--num_train_epochs [two or a few more for low learning rates]

--output_dir model-asnq-bioasqLearning takes less than twenty minutes; you can optimize hyperparameters. I obtain a precision of eighty-one percents with my previous bert-base-asnq, lr 1e-5. I call this model bert(asnq–bioAsq); this is a BERT transferred onto the ASNQ task and adapted for the BioASQ data.

- This is the classical TandA method. Let’s reverse it.

Adaptation and Transfer, no specific labeled data

We have much unlabeled domain-specific data; this would be interesting to use it before the model becomes task-specialized.

I propose to:

- adapt the pre-training of a model thanks to domain-specific plain text;

- transfer it to a particular task thanks to a general labeled dataset;

- possibly adapt it to a specific dataset.

The adaptation of the first step is meant as an adaptation to the vocabulary of the domain; while the adaptation of the third step is an adaptation to the vocabulary and the statistical patterns (i.e. possibly bias) of the particular dataset.

Step one is not simple. Doing the whole pre-training of a randomly initialized Transformer is expensive and demands gigabytes of text. This is better to just refine the pre-training, adding a few more steps on your corpora. [8] explains this in detail as to finance, for instance.

Implementation

I prefer to use a BioBert-base model [9]. This is a bert-base-cased pre-trained on both general and biomedical data. It works just as a classical BERT; and since a Transformer implementation is provided, run_glue.py still works. BioBert is a model adapted for the biomedical domain that BioASQ uses.

I perform the last two steps of the previous part with the commands:

python run_glue.py

--model_name_or_path dmis-lab/biobert-base-cased-v1.1

--do_train

--do_eval

--train_file [path to train.csv]

--validation_file [path to dev.csv]

--per_gpu_train_batch_size [...]

--learning_rate 2e-5

--num_train_epochs 2.0

--output_dir biobert-asnqand

python run_glue.py

--model_name_or_path biobert-asnq

--do_train

--do_eval

--train_file [path to bioasqTrain]

--validation_file [path to bioasqTrain]

--per_gpu_train_batch_size [...]

--learning_rate [...]

--num_train_epochs [...]

--output_dir biobert-asnq-bioasqI call the trained models biobert(asnq) and biobert(asnq–bioAsq).

Comparison

Here is the accuracy obtained with bert(asnq) vs biobert(asnq).

And here is the accuracy obtained with bert(asnq–bioAsq) vs biobert(asnq–bioAsq).

The Biobert outperforms the BERT on the biomedical domain, before having seen any BioASQ data, as expected; their precisions differ by four points (evaluation on BioAsq-train, table 1) to five and half (on BioAsq-test). BERT still performs well. A caveat: the quality of the BioASQ set is questionable, there is variance on scores between the train and test sets of table 1.

The main conclusion: you can obtain good results without using any specific labeled dataset. Taking the revert way seems fair. biobert(asnq) (red square) has close accuracy to bert(asnq–bioAsq) (blue square) on the BioAsq-test set.

Reverting the TandA method offers a second advantage. biobert(asnq) should be able to deal with more general data, i.e. not distributed like BioASQ; while bert(asnq–bioAsq) may be too specialized and not able to generalize. This way, the adaptation is done on text and not on a dataset. A dataset may have spurious correlations; plain text has less bias. This way your model learn the entire domain. This is important if the data you may label is not representative enough. This is not important for challenges such as BioASQ, where your model will run against a similar dataset, but this case is artificial. The actual performance of bert(asnq–bioAsq) (the TandA method) may be exaggerated.

Going Further

Ultimately this would be fine to cease using a labeled dataset for the transfer of the model onto the task. The transfer dataset (ASNQ here) may have spurious correlations too and it must be built for every new task. Learning both, adaptation, as I propose, and transfer from pure text is a promising method; see [10].

If you want to test this code on other domains, you may find many SciBert projects for science on github, or financial data and a FinBert at [8] or [11].

[1] hxxps://huggingface.co/transformers/model_summary.html [2] hxxps://arxiv.org/abs/1911.04118 [3] hxxps://arxiv.org/abs/2004.14503 [4] hxxps://github.com/alexa/wqa_tanda [5] hxxps://github.com/huggingface/transformers/blob/master/examples/text-classification/run_glue.py [6] hxxps://huggingface.co/transformers/pretrained_models.html [7] hxxps://github.com/dmis-lab/bioasq-biobert#Datasets [8] hxxps://www.researchgate.net/publication/334974348_FinBERT_pre-trained_model_on_SEC_filings_for_financial_natural_language_tasks [9] hxxps://github.com/dmis-lab/biobert [10] hxxps://arxiv.org/abs/2005.14165 [11] hxxps://arxiv.org/abs/1908.10063 with hxxps://github.com/ProsusAI/finBERT