Hands-on Tutorials

Ever wondered where the chi-square test for comparing proportions comes from?

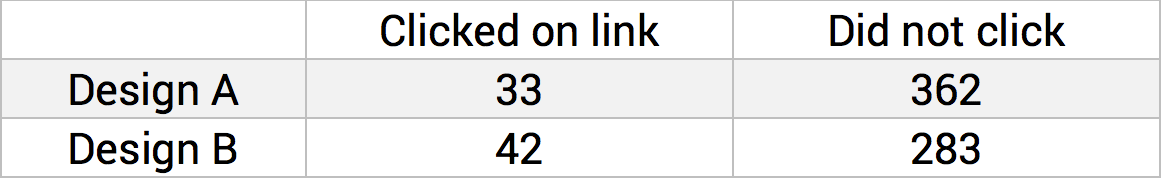

A/B testing is a tool that allows to check whether a certain causal relationship holds. For example, a data scientist working for an e-commerce platform might want to increase the revenue by improving the design of the website. If we assume that the revenue is a function of the proportion of users that click on a certain link, then the data scientist would like to know if the redesign of the webpage increases this proportion. In practice, she could create two versions of the website "Design A" and "Design B", randomly direct users to one of the two versions and record the results. She would then have a table like this:

The main question is then: given these results, can we infer a causal relationship between the change of the design and the click rate?

A/B testing is now very well-known and a good practical introduction is available here. However, it seems that the mathematical foundations behind the theory are mostly hidden away in large books. The goal of this article is thus to give an informal introduction to these mathematical foundations which include maximum likelihood estimation, hypothesis testing and asymptotic theory. In fact, the restriction to such A/B experiments will allow use to go quite far using only the central limit theorem! How far? We hope that after reading this article, the reader will have an intuition of where the test of equal proportions (or equivalently the Pearson chi-square test) comes from. More generally, we hope to give a feeling of how statistical tests are built and give some intuition about the related concepts.

Mathematical prerequisites

In this first section, we expose some mathematical facts and notations which will be used throughout this article. First assumption: to model our phenomena we will use integer-valued (discrete) random variables, since the variables that are of interest to us such as the number of page views are inherently integer-valued. (This doesn’t mean that continuous distributions won’t show up later and in fact, we assume also that the reader is familiar with the normal and chi-square distributions.) We also assume that the reader is familiar with the content of the (classical Lindeberg-Lévy) central limit theorem as stated for example here.

On to the notations. The letter P will designate some probability measure and E the expectation with respect to P. When P is the law of a random variable which depends on some parameter β, we will sometimes make this explicit by writing

for the law and the expectation with respect to this law. Next, given a random variable X we will write

for, respectively, the mean, variance and standard deviation of X.

The first integer-valued random variable one studies is the Bernoulli trial. This random variable represents the outcome of an experiment with only two possibilities, such as the flip of a coin. We encode the possible outcomes as "1" (success) and "0" (failure), and we assume that the experiment has a probability p ∈ (0, 1) of yielding "1". Then, if we name this random variable X, we can write the probability mass function as

We see that this yields exactly what we want, since by replacing k = 1 in the equation we get exactly that the probability of success is p. The Bernoulli trial is not only useful if you are going to gamble, it can be used to represent any experiment where there are two categorical outcomes: whether a treatment was successful or not, whether a user clicked on a web page or not and so on.

The mean and variance of X are easily computed, from the definition of the expectation and variance for discrete random variables, as

and

The obvious generalization of the Bernoulli trial is to repeat it. Instead of flipping the coin only once, you flip it n times. This repetition of the Bernoulli trial can be represented by a sequence of random variables which we denote

and which indicate if the i-th trial was a success or failure. However, since it is not very convenient to to work with a sequence of random variables, we will instead consider the random variable Y defined as the total number of successes in these n trials

To simplify even further, we can assume that the Bernoulli trials are independent with the same probability of success and we can then obtain the distribution of Y, which is called the binomial distribution and denoted B(n, p). This distribution is thus defined by the two parameters n (the number of trials) and p (the probability of a single Bernoulli trial) and, if we call the associated random variable Y, is given by

This last formula comes from the fact that Y = k means exactly that there are k successes among the n Bernoulli trials (and the binomial coefficient indicates the number of ways this can happen). Note that we don’t make the dependence on n explicit in the formula since it is in general fixed.

From the representation of the binomial as the sum and the independence and the formulas for the mean and variance of the Bernoulli trials, we can immediately obtain the mean and variance of Y

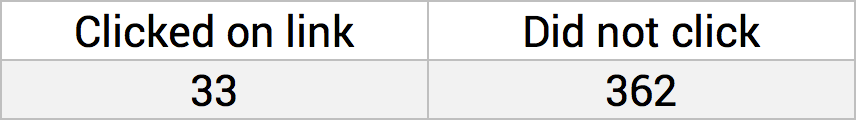

Before turning to an example like the one in Fig0, we will first consider the simpler case of the single binomial count. The simple binomial count is an experiment where we observe n independent Bernoulli trials, where the probability p of success remains constant, or equivalently, we observe the realization of a single binomial random variable. In practice, we can imagine counting the number of users that are going to click on a link on some webpage. Then, n represents the total number of users and Y (which we assume to have a binomial B(n, p) distribution) represent the number of users that are going to click on the link. Of course, in practice, we do not observe the random variable *Y*, but instead a number k (the number of users that actually clicked on the link) which we call th_e realizati_on of the random variable **Y**. We can summarize the realization of this experiment in the following table:

One of the main goals in statistics is to obtain the parameters of some target distribution based on the observation of the realization of the associated random variable. So, for example, in Fig1, we obtained a realization k of Y and from this value, we would like to obtain a estimate of the unknown parameter p. This can be done using maximum likelihood estimation.

Maximum likelihood estimation

First, we need to mention that the general principles presented in this part are not limited to the study of binomial counts or A/B tests, but are valid more generally. However, to be more clear we will adapt the computations to our particular cases. Now on to the theory.

The principle of maximum likelihood estimation gives a method to estimate the unknown parameter p from a realization of the associated random variable. This principle tells us to choose the value

that is the most likely given the observations. In our case, if we observed k users that followed the link, this mean that we choose the value of p that maximize the probability of this observation, i.e., that maximizes the likelihood function

(Note: if we had multiple observations of a random variable, the likelihood function would be more complicated. In fact, with multiple observations, the likelihood function can be defined as the product of the probabilities for all observations.)

To simplify this maximization problem, statisticians often choose to work with the log-likelihood function instead, which is simply

This works because the logarithm is an increasing function and so the maximums of L and l are attained at the same value of p. With this simplification, the maximization becomes simple, we take the derivative with respect to p and set this expression equal to 0 to obtain

Then, we solve for p to obtain

which is simply the proportion of observed successes. To check that this value is truly a maximum of the function l, we take the second derivative with respect to p, which yields

and note that this is negative for any p ∈ (0, 1) since n ≥ k.

So the likelihood function allows us to obtain an estimate of some parameter of our distribution, but this is not its only feature. In fact, there are other methods, such as the method of moments, which allows us to do the same. What makes the maximum likelihood special are its asymptotic properties, i.e., what happens to it when the number n becomes big. For a more detailed introduction to the general method, check out this article.

Asymptotic properties of the maximum likelihood estimator

Before we dig in, we first need to go over a subtle point. We mentioned before that we could assume that n was fixed, this is true when we conduct an specific experiment on users. Similarly, when we have made this experiment, the number of observed successes k is also fixed. In fact, for the experiment conducted in Fig1, we have k = 33 and n = 33 + 362 = 395. However, if we think from a mathematical point of view, we do not think about one experiment in particular, but about all experiments that could be made. What does this change? First, n is not fixed anymore, since the size of the experiment can vary. Second, and more importantly, the maximum likelihood estimator of an experiment becomes a random variable, since we do not know what the state of the world will be when we conduct the experiment.

To try to make this a bit clearer, recall that we obtained

as an estimate of p in the binomial count experiment (where we have now also added a subscript n to make the dependence visible). Maybe, if we had run the experiment an other day, only l persons instead of k would have clicked on the link and then our estimate would have been

In fact, to take into account all possible cases of the binomial count, we define the maximum likelihood estimator of p, as the random variable

where Y has a binomial B(n, p) distribution. So, we replace the observed value k by the random variable Y that gave rise to this observation to be able to take into account all possible outcomes of the experiment. This is the subtle but important point: we go from an estimate which is simply a value we compute from the realization of the experiment, to an estimator which is a random variable whose realization represents the possible outcomes of the experiment. This allows us to think more generally about our experiment.

The first interesting property of the maximum likelihood estimator follows directly from the formula from the mean of a binomial random variable

This means that our estimator will on average give the value p, that we wanted to estimate (which is good news). An other property is the consistency of the estimator, which shows that, when the n becomes large, we can replace the estimator with p. In fact, we can even go further than that and see how our estimator will be distributed around the value p. Applying the central limit theorem to sum of Bernoulli trials we defined above, we find that the quantity

can be approximated by a standard normal random variable, when n becomes large and where we defined

So, the estimator, as n grows large, is distributed as a normal random variable around the mean p, and with an explicit variance.

The fact that the maximum likelihood estimator can be approximated in such a way is true in a much more general setting than that of the binomial random variable. However, this relies on a even stronger property which is called the asymptotic normality of the maximum likelihood estimator, which we (fortunately?) didn’t have to use here. To go further in this direction, I recommend "Mathematical statistics" by Keith Knight.

Confidence intervals and hypothesis testing

The main use of the approximation property derived in the last section is the construction of confidence intervals and statistical tests. This is what we are going to do in this section.

The first way we can use the asymptotic normality derived in the last section is by computing a confidence interval for the maximum likelihood estimator of p. A confidence interval for the parameter p is, informally, an (random) interval that has a high probability of containing the (true and unknown) value p. The advantage is that confidence intervals allow us to go further than the simple maximum likelihood estimator. Instead of obtaining only a single value using the realization of the experiment, we obtain an interval which has a high chance of containing the desired parameter value.

To build this interval, we use the asymptotic normality proven in the last section to write

where N is a standard random variable. We see that the second probability is exactly what we want: a random interval containing p. So we only have to make this probability as big as possible. For example, if we take a = 1.96, (the 95% quantile of the normal distribution), we have

and thus, when n is large enough, we have approximately a 95% chance that the true value of p lies in the interval

Or to formulate it differently: in 95% of realizations of the experiments, this interval will contain the true value of p.

In practice, we observe only one realization of the experiment. So, for Fig1 for example, we have k = 33 and n = 362 + 33 = 395, thus (rounding down)

This is simple to do. However, there is a small issue to compute the variance that appears in the normal approximation: it depends on the unknown value p… But, statisticians have a trick called Slutsky’s lemma which shows that we can, when n is large, replace the unknown value p by its estimate k/n for the computation of confidence intervals. So, for the example in Fig1, we obtain

And finally, the 95% confidence interval for the parameter p is given by [0.0835 – 0.0272, 0.0835 + 0.0272] = [0.0563, 0.1107]. (Note: if you compare this result with the confidence interval of R’s prop.test function, you will get a slight discrepancy.)

Another way to go further than maximum likelihood estimation is hypothesis testing. Hypothesis testing is a method in statistics that allows us to check whether a (unknown) parameter is in some range, using the realization of the experiment. To come back to the example of Fig1, we could imagine that the our base assumption was that p = 0.05, i.e., the probability of clicking on the link is 5%. In statistics, the assumption that is made before the experiment is called the null hypothesis ("H-zero") and the alternative is called the alternative hypothesis (or "H-one"). This is generally denoted symbolically by

So, the question of hypothesis testing is to check if our null hypothesis can be rejected given the realization of the experiment. Again, this is not a yes/no question, but is always stated using probabilities, e.g., "we have a 95% chance of rejecting the null hypothesis with this realization".

Hypothesis testing relies on a quantity called a statistic which is simply a function T which depends on the random variable modeling our experiment. In the simple binomial count experiment, a statistic would be any function depending on Y which we denote T(Y). One of the most used statistic is the Wald statistic defined by

where the dependence on Y is implicit (recall that the estimator is equal to Y/n). Once we have such a statistic, we would like to know its distribution when the null hypothesis is true (statisticians say "the distribution under the null hypothesis"), so we could evaluate this probability using our realization and see if the null hypothesis is likely or not. In general, it is very hard to get the true distribution under the null of some statistic, but good tests are built so that we known the distribution at least when n becomes large. In fact, most test are built using this principle.

This is where the asymptotic normality of the maximum likelihood estimator comes in once again! Notice that we have

and we know that, under the null, the quantity inside the square can be approximated by a standard normal random variable when n becomes large. Thus, T(Y) can be approximated by the distribution of the square of a standard normal distribution which is simply the chi-squared distribution (with one degree of freedom)! Such tests give rise to the (big) family of chi-square tests.

To see how we can apply this, we use the values of Fig1 and the null hypothesis that p = 0.05. Using the Slutsky trick from the computation of the confidence interval, we can compute the value of the realization of the Wald statistic as

And, if Z has a chi-square distribution with one degree of freedom, we have

This means that we have only a 1.6% chance of observing this value if the true parameter was p = 0.05, so we can reject the null hypothesis with 100% – 1.6% = 98.4% "certainty". (The statement can be made a bit more "statistical" using the notion of p-value.)

So we have arrived a the end of the hardest part, but we still haven’t looked at A/B testing. Until now, we focused only on a single binomial count (which we could call " A-testing"). The good news is that A/B testing is simply going from one binomial count to two.

Comparing two binomial counts (A/B testing)

Finally we can come back to the problem that we considered in the introduction. Recall: we have a table like Fig0 and we would like to know if the new version of the website leads to a higher click rate. A way to model this is to say that the count of the number of clicks for website A and the number of clicks for website B are both modeled as binomial distributions, but with different parameters

If we assign the users to the A or B page randomly, we can in addition assume that these binomial random variables are independent. As before, we define the maximum likelihood estimators for those two binomials as

Using this formalism, we are now ready to frame the question in the introduction as a statistical hypothesis test:

In other terms, we want to test the null hypothesis that the design of the website has no effect on the proportion of clicks. In statistics, this is called a test of equal proportions and it is based on the following test statistic

where

What we are going to show now is that, under the null,

Once we have done that, we have a statistical test! Indeed, given this result, we can do a similar computation as the one in last section, for the simpler binomial count test.

The first important remark is the following:

i.e., the sum of our binomial random variables is itself a binomial random variable with known parameters. This is only true if the two binomial random variables are independent (which we assumed). (This can be proven by recalling that the binomial is simply the distribution of a sum of independent Bernoulli trials.) The important point of this remark is that the quantity

is thus another maximum likelihood estimator for p, under the null. And, by using the consistency of the maximum likelihood estimator, we can then replace this quantity by p, when

Next, by simple algebra and using the I function defined above, we obtain

or equivalently

Now, under the null,

and the fact that the multiplying fraction goes to 1. Finally, under the null,

since

by the results we proved above. Thus, we have obtained what we wanted to prove! Of course, this proof is not completely formal, but it gives the main structure and intuition. (In fact, it can be made formal quite simply using characteristic functions, for those who master the different types of convergence for random variables.)

As we already mentioned, this can now be used to conduct the statistical test using a similar method as in the test we did before. In fact, I will leave this to the reader, since there is no royal road to geometry!

Exercise: compute the value of the z statistic and the value and the approximate probability of obtaining the values of Fig0 and conclude whether the experiment was successful or not.

Solution: you should find

This finishes this introductory article and to go (much) further, I recommend "Categorical Data Analysis" by Alan Agresti. I hope that this has made the concepts of maximum likelihood estimation, statistical testing and A/B experiments a bit clearer and that it was useful in your Data Science journey!

Bonus fact: The z-square test we derived in this article can be shown to be equivalent to the well-known Pearson chi-square test!