How well do these two transformer models understand documents? In this second part I will show you how to train them and compare their results for the task of key index extraction.

Finetuning Donut

So let’s pick up from part 1, where I explain how to prepare the custom data. I zipped the two folders of the dataset and uploaded them into a new Hugging Face dataset here. The Colab notebook I used can be found [here](https://github.com/Toon-nooT/notebooks/blob/main/Donut_vs_pix2struct_2_Ghega_donut.ipynb). It will download the dataset, set up the environment, load the Donut model and train it.

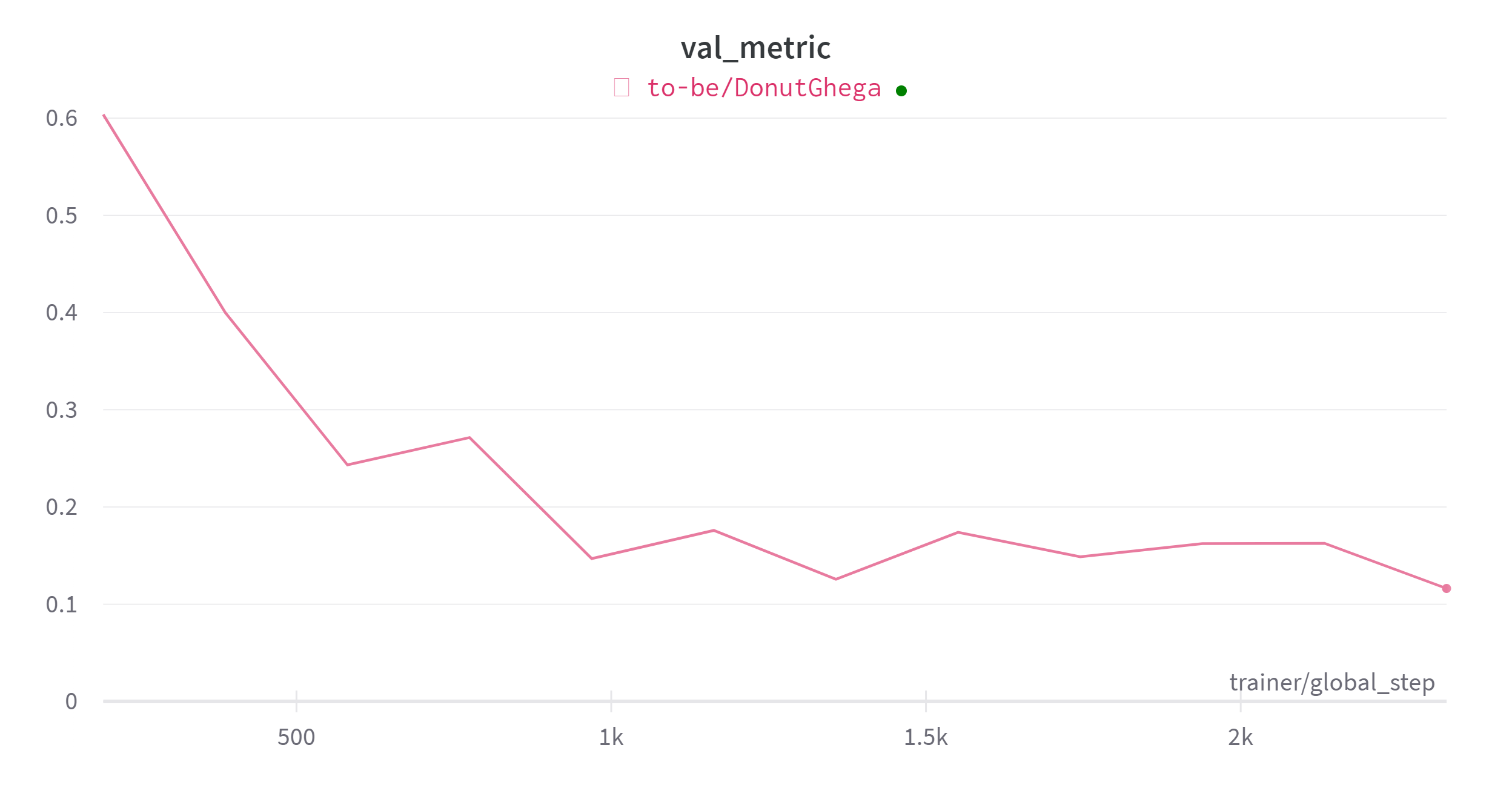

After finetuning for 75 minutes I stopped it when the validation metric (which is the edit distance) reached 0.116:
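
For readers unfamiliar with the metric, here is a minimal sketch of a normalised edit distance. It assumes the metric is the Levenshtein distance divided by the length of the longer string; the notebook may compute it differently (for example through a library), and the function names are illustrative only.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep when equal)
            ))
        previous = current
    return previous[-1]


def normalized_edit_distance(prediction: str, answer: str) -> float:
    """0.0 means identical strings, 1.0 means nothing in common."""
    longest = max(len(prediction), len(answer))
    if longest == 0:
        return 0.0
    return levenshtein(prediction, answer) / longest
```

Averaged over the validation set, a lower value means the generated sequences are closer to the ground truth.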

On field level I get these results for the validation set:

When we look at Doctype, we see Donut always correctly identifies the docs as either a patent or a datasheet. So we can say that classification reaches 100% accuracy. Also note that even though we have a class datasheet, the document doesn’t need to contain this exact word to be classified as such. This does not matter to Donut, as it was finetuned to recognize such documents as datasheets.
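
A field-level score like this can be sketched as follows, assuming each document has already been parsed into a dictionary of field names and values and that a field counts as correct only on an exact string match. The function name and the sample values are hypothetical; the notebook may score fields differently.

```python
from collections import defaultdict


def field_accuracy(predictions, ground_truths):
    """Share of documents per field where the prediction equals the ground truth."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for predicted, expected in zip(predictions, ground_truths):
        for field, expected_value in expected.items():
            total[field] += 1
            # A field the model did not return counts as wrong.
            if predicted.get(field) == expected_value:
                correct[field] += 1
    return {field: correct[field] / total[field] for field in total}


# Hypothetical sample: two documents, one with a Voltage mismatch.
scores = field_accuracy(
    [{"DocType": "datasheet", "Voltage": "5 V"}, {"DocType": "patent"}],
    [{"DocType": "datasheet", "Voltage": "5"}, {"DocType": "patent"}],
)
```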

Other fields score quite OK as well, but it’s hard to say with this graph alone what goes on under the hood. I’d like to see where the model goes right and wrong in specific cases. So I created a routine in my notebook to generate an HTML-formatted report table. For every document in my validation set I have a row entry like this:

On the left is the recognized (inferred) data together with its ground truth. On the right side is the image. I also used color codes to have a quick overview:

So ideally, everything should be highlighted green. If you want to see the full report for the validation set, you can see it here, or download this zip file locally.
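
A report row of this kind could be generated along these lines. This is only a sketch: the colour for a mismatch, the helper names and the markup are assumptions, not the routine from the notebook. Green for a match and orange for a field that was not returned follow the description in this article.

```python
from html import escape


def cell_color(predicted, expected):
    """Pick a background colour for one field."""
    if predicted is None:
        return "orange"      # field missing from the model output
    if predicted == expected:
        return "lightgreen"  # prediction matches the ground truth
    return "salmon"          # assumed colour for a mismatch


def report_row(predicted_fields, expected_fields, image_path):
    """One table row: coloured fields on the left, document image on the right."""
    cells = []
    for field, expected in expected_fields.items():
        predicted = predicted_fields.get(field)
        shown = "" if predicted is None else predicted
        cells.append(
            f'<div style="background:{cell_color(predicted, expected)}">'
            f"{escape(field)}: {escape(shown)} (truth: {escape(expected)})</div>"
        )
    return (
        "<tr><td>" + "".join(cells) + "</td>"
        f'<td><img src="{escape(image_path)}" width="400"></td></tr>'
    )
```

Wrapping the rows of all validation documents in a `<table>` element and writing the result to a file gives a page that can be scanned quickly for non-green cells.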

With this information we can spot the usual OCR errors like Dczcmbci instead of December, or GL420 instead of GL420 (0’s and O’s are hard to distinguish), that lead to false positives.

Let’s focus now on the worst performing field: Voltage. Here are some samples of the inferred data, the ground truth and the actual relevant document snippet.

The problem here is that the ground truth is mostly wrong. There is no standard of including the unit (Volt or V) or not. Sometimes irrelevant text is taken along, sometimes just a (wrong!) number. I can see now why Donut had a hard time with this.

Above are some samples where Donut actually returns the best answer while the ground truth is incomplete or wrong.

Above is another good example of bad training data confusing Donut. The ‘I’ letter in the ground truth is an artifact of the OCR reading the vertical line in front of the information. Sometimes it’s there, other times not. If you preprocess your data to be consistent in this regard, Donut will learn this and adhere to this structure.
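
Such a preprocessing step might look like the sketch below. Both rules are hypothetical examples of making the ground truth consistent (dropping the stray leading character, and always writing a voltage as a number followed by the unit); they were not applied in this experiment and would need checking against the real data.

```python
import re


def strip_line_artifact(value: str) -> str:
    """Drop a leading 'I', 'l' or '|' that OCR produced from a vertical line."""
    return re.sub(r"^[Il|]\s+", "", value.strip())


def normalize_voltage(value: str) -> str:
    """Keep the first number and always append the unit."""
    match = re.search(r"-?\d+(?:\.\d+)?", value)
    # Values without any number are left alone apart from trimming.
    return f"{match.group(0)} V" if match else value.strip()
```

For example, `strip_line_artifact("I GL420")` gives `GL420`, and `normalize_voltage("5.0V")` gives `5.0 V`.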

Finetuning Pix2Struct

Donut’s results are holding up. Will Pix2Struct’s as well? The colab notebook I used for the training can be found here.

After training for 75 minutes I got an edit distance score of 0.197 versus 0.116 for Donut. It is definitely slower at converging.

Another observation is that so far every value that is returned starts with a space. This could be an error in the ImageCaptioningDataset class, but I did not investigate further into the root cause. I do remove this space when generating the validation results though.

Prediction: <s_DocType> datasheet</s_DocType></s_DocType> TSZU52C2 – TSZUZUZC39<s_DocType>

Answer: <s_DocType>datasheet</s_DocType><s_Model>Tszuszcz</s_Model><s_Voltage>O9</s_Voltage>

I stopped the finetuning process after 2 hours because the validation metric went up again:
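
The stopping decision here was made by hand. As a sketch of how the same rule could be automated, the check below stops once the last few validation scores are all worse than the best score seen before them. The function name, the `patience` parameter and the sample scores are hypothetical.

```python
def should_stop(history, patience=2):
    """True when the last `patience` validation scores are all worse than
    the best earlier score (lower is better for edit distance)."""
    if len(history) <= patience:
        return False
    best_before = min(history[:-patience])
    return all(score > best_before for score in history[-patience:])


# Hypothetical validation scores, one per validation run.
still_improving = should_stop([0.30, 0.20, 0.15])
going_up_again = should_stop([0.30, 0.20, 0.15, 0.16, 0.17])
```

In this example `still_improving` is `False` and `going_up_again` is `True`. A larger `patience` tolerates more noise in the metric before giving up.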

But what does that mean on field level for the validation set?

That looks a lot worse than the results of Donut! If you want to see the full HTML report, you can see it here, or download this zip file locally.

Only the classification between a datasheet and a patent seems to be quite OK (but not as good as Donut). The other fields are just plain bad. Can we deduce what’s going on?

For the patent docs, I see lots of orange lines which mean that Pix2Struct did not return those fields at all.

And even for patents where it returns fields, they are completely made up. Whereas Donut’s errors stem from picking a value from another region on the document or from minor OCR mistakes, Pix2Struct is hallucinating here.

Disappointed by Pix2Struct’s performance, I tried several new training runs in hopes of better results:

I tried gradually lowering accumulate_grad_batches from 8 to 1, but then the learning rate is too high and the training overshoots. Lowering the learning rate to 1e-5 makes the model not converge. Other combinations lead to the model collapsing. Even when the validation metric looked quite OK with some specific hyperparameters, the model was giving a lot of incorrect or unparseable lines, like:

<s_DocType> datasheet</s_DocType><s_Model> CMPZSM</s_Model><s_StorageTemperature> -0.9</s_Voltage><s_StorageTemperature> -051c 150</s_StorageTemperature>

None of these attempts gave me substantially better results, so I left it at that.
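
Unparseable lines like the one quoted above can be detected automatically. The sketch below assumes a flat structure in which every field is written as `<s_Name>value</s_Name>` and fields are never nested. It returns the parsed fields, or `None` when a tag is opened or closed out of order. The function name is illustrative, and values are stripped so that a leading space does not count as a difference.

```python
import re

TAG = re.compile(r"<(/?)s_(\w+)>")


def parse_or_none(sequence):
    """Return {field: value} if all tags are properly paired, else None."""
    fields = {}
    open_field = None  # name of the field currently open, if any
    value_start = 0
    for match in TAG.finditer(sequence):
        closing, name = match.group(1) == "/", match.group(2)
        if not closing:
            if open_field is not None:
                return None  # a new field starts before the previous one closed
            open_field, value_start = name, match.end()
        else:
            if name != open_field:
                return None  # closing tag does not match the open field
            fields[name] = sequence[value_start:match.start()].strip()
            open_field = None
    # Text outside of any tag pair is ignored in this sketch.
    return fields if open_field is None else None
```

Counting how often this returns `None` on the validation set gives a quick check that is independent of the edit distance.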

Until I saw that a cross-attention bug was fixed in the Hugging Face implementation. So I decided to give it a last try. I trained for two and a half hours and stopped at a validation metric of 0.1416.

This definitely looks better than all previous runs. Looking at the HTML report, it now seems to hallucinate less. Overall it’s still performing worse than Donut.

As for reasons why, I have two theories. Firstly, Pix2Struct was mainly trained on HTML web page images (predicting what is behind masked image parts) and has trouble switching to another domain, namely raw text. Secondly, the dataset used was challenging. It contains many OCR errors and non-conformities (such as including units, length, minus signs). In my other experiments it really came to light that the quality and conformity of the dataset are more important than the quantity. In this dataset the data quality is really subpar. Maybe that is why I could not replicate the claim in the paper that Pix2Struct exceeds Donut’s performance.

Inference speed

How do the two models compare in terms of speed? All training runs were done on the same T4 architecture, so the times can be readily compared. We already saw that Pix2Struct takes much longer to converge. But what about inference times? We can compare the time it took for inferring the validation set:

Donut takes on average 1.3 seconds per document to extract, while Pix2Struct takes more than double that.
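
A per-document average like this can be measured with a simple wall-clock loop. In the sketch below, `extract` stands for whatever function runs one model on one document; it and the other names are placeholders, not code from the notebooks.

```python
import time


def average_seconds_per_document(extract, documents):
    """Run `extract` on every document and return the mean wall-clock time."""
    if not documents:
        raise ValueError("need at least one document to time")
    start = time.perf_counter()
    for document in documents:
        extract(document)
    elapsed = time.perf_counter() - start
    return elapsed / len(documents)
```

Timing both models over the same validation documents on the same hardware keeps the comparison fair.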

Takeaways

- The clear winner for me is Donut, in terms of ease of use, performance, training stability and speed.

- Pix2Struct is challenging to train because it is very sensitive to training hyperparameters. It converges more slowly and doesn’t reach the results of Donut on this dataset. It may prove worthwhile to revisit Pix2Struct with a high(er) quality dataset.

- Because the Ghega dataset contains too many inconsistencies, I will refrain from using it in further experiments.

Are there any alternative models?

- Dessurt, which seems to share a similar architecture with Donut, should perform in the same league.

- DocParser, which its paper claims performs even a little better. Unfortunately there is no plan to release this model in the future.

- mPLUG-DocOwl, yet another OCR-free LLM for document understanding with promising benchmarks, will soon be released.

References:

Pix2Struct: Screenshot Parsing as Pretraining for Visual Language Understanding