What happens if multiple data pipelines need to interact with the same API endpoint? Would you really have to declare this endpoint in every pipeline? In case this endpoint changes in the near future, you will have to update its value in every single file.

Airflow variables are simple yet valuable constructs, used to prevent redundant declarations across multiple DAGs. They are simply objects consisting of a key and a JSON serializable value, stored in Airflow’s metadata database.

And what if your code uses tokens or other types of secrets? Hardcoding them in plain text is not a secure approach. Beyond reducing repetition, Airflow variables also help manage sensitive information. With six different ways to define variables in Airflow, selecting the appropriate method is crucial for ensuring security and portability.

An often overlooked aspect is the impact that variable retrieval has on Airflow performance. Careless retrieval can flood the metadata database with requests every time the Scheduler parses the DAG files (every thirty seconds by default).

It’s fairly easy to fall into this trap, unless you understand how the Scheduler parses DAGs and how Variables are retrieved from the database.

Defining Airflow Variables

Before discussing how Variables are fetched from the metastore and which best practices help optimise DAGs, it’s important to get the basics right. For now, let’s just focus on how we can actually declare variables in Airflow.

As mentioned already, there are several different ways to declare variables in Airflow. Some of them turn out to be more secure and portable than others, so let’s examine all and try to understand their pros and cons.

1. Creating a variable from the User Interface

In this first approach, we are going to create a variable through the User Interface. From the top menu select Admin → Variables → +

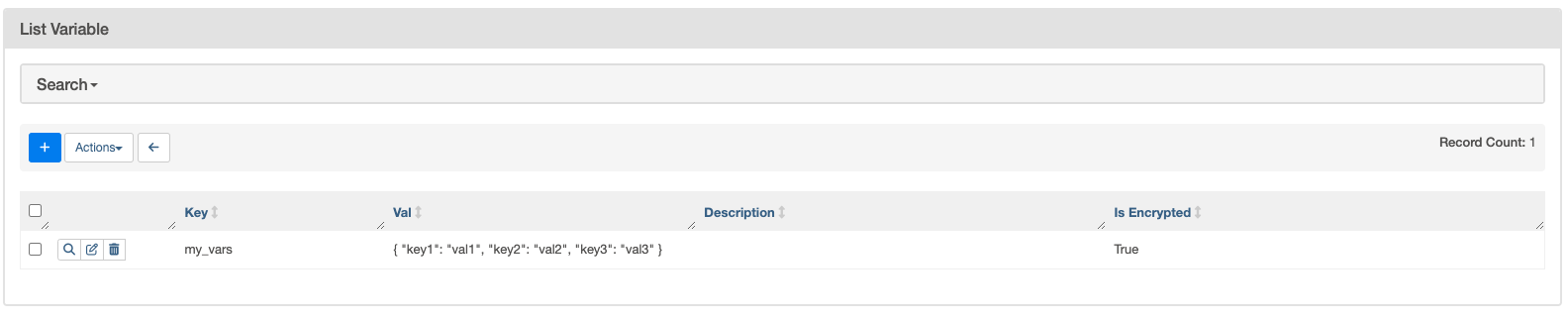

Once you enter the key and value, click Save to create it. The variable should now be visible in the Variables list. By default, variables created on the UI are automatically stored in the metadata database.

Notice how the value of the Variable is shown in plain text. If you are looking into storing sensitive information in one of your Airflow Variables, then the UI approach may not be the most suitable.

Furthermore, this option lacks portability. If you want to re-create an environment, you will first have to manually export the variables from the current environment and then import them into the newly created one.

2. Creating a variable by exporting an environment variable

The second option is to export environment variables using the AIRFLOW_VAR_<VARIABLE_NAME> notation.

The following commands can be used to create two variables, namely foo and bar.

    export AIRFLOW_VAR_FOO=my_value
    export AIRFLOW_VAR_BAR='{"newsletter":"Data Pipeline"}'

One advantage of this approach is that variables created via environment variables are not visible on the UI (yet, you can of course reference them in your code) and thus any sensitive information will not be visible either.

Unlike variables created via the UI, this approach won’t persist them in the metadata database. This means that environment variables are faster to retrieve, since no database connection needs to be established.

Still, managing environment variables can be challenging too. How do you secure the values if the environment variables are stored in a file used by the automation script responsible for deploying Airflow?
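To make the naming convention concrete, here is a minimal sketch of how a key maps to its environment variable. The `read_env_variable` helper is hypothetical and only mimics the `AIRFLOW_VAR_<KEY>` lookup for illustration; it is not Airflow's own code.

```python
import json
import os

PREFIX = "AIRFLOW_VAR_"


def read_env_variable(key, deserialize_json=False):
    """Hypothetical helper: look up AIRFLOW_VAR_<KEY> without touching any database."""
    raw = os.environ.get(PREFIX + key.upper())
    if raw is None:
        return None
    # JSON values are stored as plain strings and parsed on request.
    return json.loads(raw) if deserialize_json else raw


# Same values as the two export commands above.
os.environ["AIRFLOW_VAR_FOO"] = "my_value"
os.environ["AIRFLOW_VAR_BAR"] = '{"newsletter":"Data Pipeline"}'

foo = read_env_variable("foo")
bar = read_env_variable("bar", deserialize_json=True)

print(foo)
print(bar["newsletter"])
```

Note that the variable key is lowercase in code (foo) while the environment variable name is uppercase (AIRFLOW_VAR_FOO).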

3. Creating a variable via Airflow CLI

Variables can also be created with the use of Airflow CLI. You will first need to connect to the Airflow Scheduler worker. For example, if you are running Airflow via Docker, you will first need to find the scheduler’s container id and run

    docker exec -it <airflow-scheduler-container-id> /bin/bash

You can now create a new Airflow Variable using the airflow variables command:

    airflow variables set \
        my_cli_var \
        my_value \
        --description 'This variable was created via CLI'

If you would like to assign multiple values to a particular variable, you should consider using JSON format:

    airflow variables set \
        my_cli_json_var \
        '{"key": "value", "another_key": "another_value"}' \
        --description 'This variable was created via CLI'

You will also have the option to serialise the JSON variable. This can be done by providing the -j or --json flag.

    airflow variables set \
        --json \
        my_cli_serialised_json_var \
        '{"key": "value", "another_key": "another_value"}' \
        --description 'This variable was created via CLI'

Now if we head back to the Variable list on the UI, we can see all three variables that were created in the previous steps.

Variables created via the CLI are stored in the metadata database and are visible on the User Interface, which means sensitive information can be exposed here as well.

In my opinion, this approach is handy in development environments where you may want to quickly create and test a particular variable or some functionality referencing it. For production deployments, you will have to write an automation script to create (or update) these variables. This means the values must be stored somewhere, say in a file, which is hard to handle safely given that some variables may contain sensitive information.
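As a rough idea of what such an automation script could look like, the sketch below builds one `airflow variables set` command per variable. The `build_set_command` helper and the `variables` mapping are hypothetical names used for illustration, and the script only prints the commands rather than running them.

```python
import json
import shlex


def build_set_command(key, value, description=None):
    """Return the argument list for one `airflow variables set` call."""
    # Non-string values are passed as a JSON string, as in the CLI example above.
    if not isinstance(value, str):
        value = json.dumps(value)
    argv = ["airflow", "variables", "set", key, value]
    if description:
        argv += ["--description", description]
    return argv


# Hypothetical variables; in practice these would be loaded from wherever you keep them.
variables = {
    "my_cli_var": "my_value",
    "my_cli_json_var": {"key": "value", "another_key": "another_value"},
}

commands = [
    build_set_command(key, value, description="This variable was created via CLI")
    for key, value in variables.items()
]

for argv in commands:
    # shlex.join quotes each argument so the line can be pasted into a shell.
    print(shlex.join(argv))
```

To actually apply the commands, each argument list could be handed to `subprocess.run(argv, check=True)` on a machine where the Airflow CLI is available. The sketch also makes the underlying problem visible: the values have to live somewhere before the script can read them.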

4. Creating a variable using the REST API

This fourth approach involves calling the REST API in order to get some variables created. This is similar to the Airflow CLI approach and it also offers the same advantages and disadvantages.

    curl -X POST ${AIRFLOW_URL}/api/v1/variables \
        -H "Content-Type: application/json" \
        --user "${AIRFLOW_USERNAME}:${AIRFLOW_PASSWORD}" \
        -d '{"key": "json_var", "value": "{\"key1\":\"val1\"}"}'

5. Creating variables programmatically

Programmatic creation of variables is also feasible and straightforward.

    def create_vars():
        # Imported inside the callable so it only runs when the task executes
        from airflow.models import Variable

        Variable.set(key='my_var', value='my_val')
        Variable.set(
            key='my_json_var',
            value={'my_key': 23, 'another_key': 'another_val'},
            serialize_json=True,
        )

    ...

    PythonOperator(
        task_id='create_variables',
        python_callable=create_vars,
    )

Needless to say, this is bad practice and should be avoided in production deployments. Variables, especially those containing sensitive information, should not be declared in DAG files, given that the code is version controlled and also visible on the Code tab of the UI.

6. Creating variables in a Secret Store/Backend ❤

In addition to retrieving variables from environment variables or the metastore database, you can also enable an alternative secrets backend to retrieve Airflow variables.

Airflow has the capability of reading connections, variables and configuration from Secret Backends rather than from its own Database. While storing such information in Airflow’s database is possible, many of the enterprise customers already have some secret managers storing secrets, and Airflow can tap into those via providers that implement secrets backends for services Airflow integrates with.

Currently, the secrets backend implementations provided by the Apache Airflow community include:

- Amazon (Secrets Manager & Systems Manager Parameter Store)

- Google (Cloud Secret Manager)

- Microsoft (Azure Key Vault)

- HashiCorp (Vault)

In fact, this is the best, most secure and portable way for defining Variables in Airflow.
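As a rough sketch of what enabling a backend involves: Airflow reads the backend class and its arguments from the [secrets] section of its configuration, which can also be supplied as environment variables. The snippet below only assembles those two settings for the Amazon Secrets Manager backend. The `variables_prefix` value is an assumption chosen for illustration, and in a real deployment these values would be set in the deployment environment rather than from Python.

```python
import json
import shlex

# Backend class shipped with the Amazon provider package.
backend_class = (
    "airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend"
)

# Assumed prefix: variables would then be looked up under airflow/variables/<key>.
backend_kwargs = {"variables_prefix": "airflow/variables"}

env = {
    "AIRFLOW__SECRETS__BACKEND": backend_class,
    # The keyword arguments are passed as a single JSON string.
    "AIRFLOW__SECRETS__BACKEND_KWARGS": json.dumps(backend_kwargs),
}

for name, value in env.items():
    print(f"export {name}={shlex.quote(value)}")
```

Check the documentation of the provider you use for the exact class path and supported arguments, since they differ between backends.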

Hiding Sensitive Variable Values

For some of the methods outlined in the previous section, I mentioned that sensitive information can be visible on the User Interface. It is, however, possible to hide sensitive values, as long as your variables are properly named.

If a variable name contains certain keywords that can possibly indicate that the variable holds sensitive information, then its value will automatically be hidden.

Here’s the list of keywords that mark a Variable as holding sensitive information:

- access_token
- api_key
- apikey
- authorization
- passphrase
- passwd
- password
- private_key
- secret
- token
- keyfile_dict
- service_account

This means that if your variable name contains any of these keywords, Airflow will hide its value. Now let’s go ahead and try out an example to verify that this functionality works as expected.

First, let’s create a new variable without including any of the keywords mentioned above. As we can observe, the value of the variable is visible on the User Interface.

In the following screen, we attempt to create a new variable called my_api_key. According to what we discussed earlier, since the variable name contains the api_key keyword, Airflow should hide its value to protect the sensitive information.

In fact, if we now head back to the Variable list on the UI, we can now see that the value of the newly created variable is hidden.

If you are not happy with the existing list of keywords, you can extend it by specifying additional keywords to be taken into account when hiding Variable values. This can be configured through sensitive_var_conn_names in airflow.cfg (under the [core] section), or by exporting the AIRFLOW__CORE__SENSITIVE_VAR_CONN_NAMES environment variable.

sensitive_var_conn_names (new in version 2.1.0)

A comma-separated list of extra sensitive keywords to look for in variables names or connection’s extra JSON.

- Type: string
- Default: ''
- Environment Variable: AIRFLOW__CORE__SENSITIVE_VAR_CONN_NAMES
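The matching rule described in this section can be sketched in a few lines. This is a simplified illustration that assumes a case-insensitive substring match on the variable name; the `should_hide` and `parse_extra_keywords` helpers are hypothetical names and not Airflow's own implementation.

```python
DEFAULT_SENSITIVE_KEYWORDS = {
    "access_token", "api_key", "apikey", "authorization",
    "passphrase", "passwd", "password", "private_key",
    "secret", "token", "keyfile_dict", "service_account",
}


def parse_extra_keywords(raw):
    """Split a comma-separated setting such as 'iban, ssn' into a set of keywords."""
    return {part.strip().lower() for part in raw.split(",") if part.strip()}


def should_hide(name, extra_keywords=""):
    """Return True if the variable name contains any sensitive keyword."""
    keywords = DEFAULT_SENSITIVE_KEYWORDS | parse_extra_keywords(extra_keywords)
    lowered = name.strip().lower()
    return any(keyword in lowered for keyword in keywords)


hidden_default = should_hide("my_api_key")
visible_default = should_hide("my_var")
hidden_extra = should_hide("billing_iban", extra_keywords="iban, ssn")

print(hidden_default, visible_default, hidden_extra)
```

The last call shows the effect of extending the list: billing_iban is only treated as sensitive once iban is supplied as an extra keyword.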

Efficient Variable Retrieval

By default, your Airflow DAGs are parsed every 30 seconds. The scheduler will scan your DAG folder and identify any changes made to the DAG files. If you are not fetching variables the right way, the DAG parsing process may soon become a bottleneck.

Depending on the method you choose to declare variables, Airflow may have to initiate a connection to the metastore database for every variable declared in your DAG files.

Avoid overloading metastore with requests

In order to retrieve variables in your DAGs, there are essentially two approaches you can follow:

- Using the Variable.get() function

- Using the var template variable

If you have chosen to go with the first option, Variable.get() will create a new connection to the metastore database in order to fetch the value of the specified variable. The place where you call this function in your DAG file can have a huge performance impact.

Bad Practice

If the function gets called outside of a task, or within the DAG Context Manager, a new -useless- connection to the metastore will be created every time the DAG is parsed (i.e. every thirty seconds).

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    my_var = Variable.get('my_var')

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_var',
            python_callable=lambda: print(my_var),
        )

If the same pattern exists in many different DAGs, then sooner or later, the metastore database will run into trouble.

There are in fact some edge cases where this pattern cannot be avoided. For example, let’s assume you want to dynamically create tasks based on the value of a variable. In that case you may have to call the function outside of the tasks, or within the Context Manager. Make sure to avoid this approach when possible though.
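To get a feel for the cost, the following sketch simulates repeated parsing of a DAG file without needing an Airflow installation. `FakeVariable` is a hypothetical stand-in that merely counts lookups, and the two "DAG files" are reduced to the lines that matter; 120 parses corresponds to roughly one hour at the default thirty-second interval.

```python
class FakeVariable:
    """Hypothetical stand-in for airflow.models.Variable that counts lookups."""

    lookups = 0
    store = {"my_var": "my_value"}

    @classmethod
    def get(cls, key):
        cls.lookups += 1
        return cls.store[key]


# Top-level call: executed every time the file is parsed.
TOP_LEVEL_DAG_FILE = """
my_var = Variable.get('my_var')

def print_var():
    print(my_var)
"""

# Call inside the task callable: executed only when the task runs.
IN_TASK_DAG_FILE = """
def print_var():
    print(Variable.get('my_var'))
"""


def lookups_after_parsing(source, parses):
    """Execute the file's top-level code `parses` times and count the lookups."""
    FakeVariable.lookups = 0
    for _ in range(parses):
        exec(source, {"Variable": FakeVariable})
    return FakeVariable.lookups


top_level_lookups = lookups_after_parsing(TOP_LEVEL_DAG_FILE, parses=120)
in_task_lookups = lookups_after_parsing(IN_TASK_DAG_FILE, parses=120)

print(top_level_lookups, in_task_lookups)
```

In this simulation the top-level version performs one lookup per parse, while the in-task version performs none until the task itself is executed.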

It is also important to mention that the same problem will occur, even if you call Variable.get() function in the arguments of an operator.

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    def _print_var(my_var):
        print(my_var)

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_var',
            python_callable=_print_var,
            op_args=[Variable.get('my_var')],
        )

In fact, this can easily be avoided with the use of the template engine.

Best Practices

Instead of calling Variable.get(), we can use templated references. With this technique, the value of a variable will only be fetched at runtime.

The code snippet below demonstrates how to use templated references for variables that hold either JSON or non-JSON values.

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    def _print_var(val1, val2, val3, val4):
        print(val1)
        print(val2)
        print(val3)
        print(val4)

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_var',
            python_callable=_print_var,
            op_args=[
                '{{ var.value.my_var }}',
                '{{ var.json.my_vars.key1 }}',
                '{{ var.json.my_vars.key2 }}',
                '{{ var.json.my_vars.key3 }}',
            ],
        )

However, the template engine approach only works when the operator declares the argument in question as a templated field.

In cases where templated references won’t work, you can still make sure that Variable.get() gets called within the task, so that no connections to the metastore database are initiated every time a DAG is parsed.

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    def _print_var():
        my_var = Variable.get('my_var')
        print(my_var)

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_var',
            python_callable=_print_var,
        )

Storing multiple values in a single Variable

Now let’s assume that a particular DAG needs to retrieve three different values. Even if you follow the best practices outlined in the previous section, you will still have to initiate three distinct connections to the metastore database.

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    def _print_vars():
        my_var = Variable.get('my_var')
        another_var = Variable.get('another_var')
        one_more_var = Variable.get('one_more_var')
        print(my_var)
        print(another_var)
        print(one_more_var)

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_vars',
            python_callable=_print_vars,
        )

Instead, we could create a single JSON Variable consisting of three key-value pairs. Obviously, you should only do this as long as it logically makes sense to group the three values into one variable.
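The difference in lookups can be illustrated with a small simulation. `CountingStore` is a hypothetical stand-in for the metastore that counts how often it is queried, and the three values are placeholders.

```python
import json


class CountingStore:
    """Hypothetical stand-in for the metastore that counts lookups."""

    def __init__(self, rows):
        self.rows = rows
        self.lookups = 0

    def get(self, key):
        self.lookups += 1
        return self.rows[key]


values = {"key1": "first", "key2": "second", "key3": "third"}  # placeholder values

# Three separate variables: one lookup per value.
separate = CountingStore(dict(values))
for key in values:
    separate.get(key)

# One JSON variable: a single lookup, then parse the string locally.
grouped = CountingStore({"my_vars": json.dumps(values)})
my_vars = json.loads(grouped.get("my_vars"))

print(separate.lookups, grouped.lookups)
```

The string produced by `json.dumps(values)` is the kind of value you would store under a single key such as my_vars.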

We can now retrieve the values from all keys specified in our variable with just one connection to the metastore database.

    from datetime import datetime

    from airflow import DAG
    from airflow.models import Variable
    from airflow.operators.python import PythonOperator

    def _print_vars():
        my_vars = Variable.get('my_vars', deserialize_json=True)
        print(my_vars['key1'])
        print(my_vars['key2'])
        print(my_vars['key3'])

    with DAG('my_dag', start_date=datetime(2024, 1, 1)) as dag:
        print_var = PythonOperator(
            task_id='print_vars',
            python_callable=_print_vars,
        )

Final Thoughts..

Declaring Airflow Variables is straightforward, but retrieving them can turn into a nightmare for the metastore database if you don’t apply best practices.

In this tutorial, we demonstrated six different ways to create Airflow Variables. Every approach comes with its pros and cons and should be used accordingly. Bear in mind that the best practice for production deployments is to use a Secrets Backend, since it provides security and portability.

More importantly, we discussed which techniques should be avoided in order not to overload the Airflow database, as well as how to make optimal use of the Variables construct. I hope it is now clear that variables should be retrieved either via templated references or within the task function definition.